AVI Ingress Solution Elements

With the AVI configuration in place, it's time to move on to the AVI Kubernetes Operator (AKO). AKO communicates with the AVI Controller through its API and realizes the LoadBalancer and Ingress type services for us, translating the desired state of these K8s services into AVI Virtual Services that run on the external Service Engines. The AKO deployment consists of the following components:

- The AVI Controller
  - Manages the lifecycle of the Service Engines
  - Provides centralized analytics
- The Service Engines (SE)
  - Host the Virtual Services for K8s Ingress and LoadBalancer
  - Handle the Virtual Services data plane
- The AVI Kubernetes Operator (AKO)
  - Provides Ingress-Controller capability within the K8s cluster
  - Monitors Ingress and LoadBalancer K8s objects and translates them into AVI configuration via API
  - Runs as a Pod in the K8s cluster

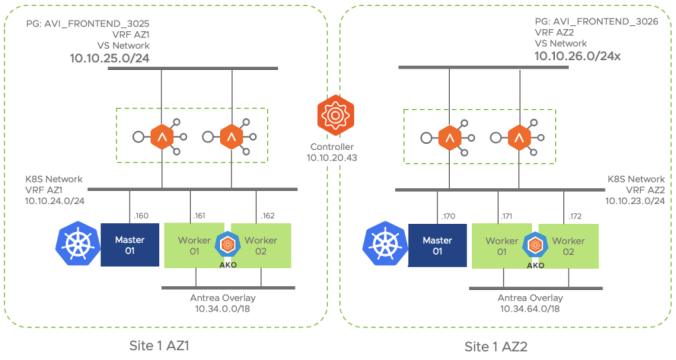

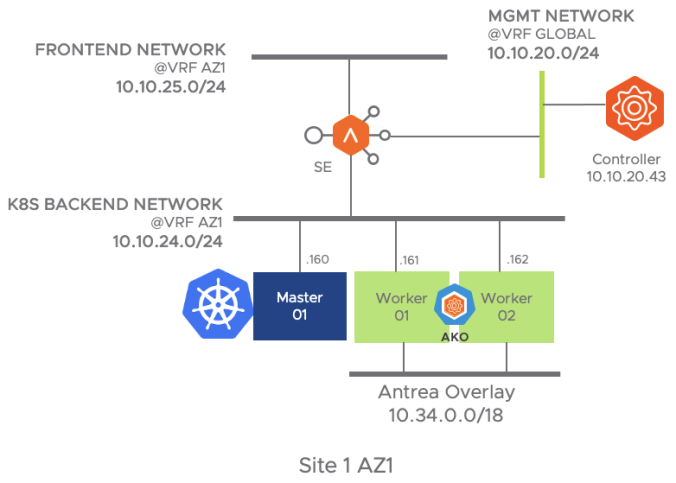

The following figure represents the network diagram for the different elements that make up the AKO integration in Site1 AZ1.

Similarly, the diagram below represents Availability Zone 2. Note that the AVI Controller (Control/Management Plane) is shared between both AZs in the same Site, whereas the Data Plane (i.e. the Service Engines) remains separated in different VMs and isolated from a network perspective.

I am using vanilla Kubernetes based on the 1.18 release. Each cluster is made up of a single master and two worker nodes, and we will use Antrea as the CNI. Antrea is a cool Kubernetes networking solution intended to be Kubernetes native. It operates at Layer 3/4 to provide networking and security services for a Kubernetes cluster. You can find more information on Antrea and how to install it here. To install Antrea you need to assign a CIDR block from which the Pods get their IP addresses. In my case I have selected two CIDR blocks, one per cluster, as per the table below:

| Cluster Name | POD CIDR Block | CNI | # Master | # Workers |
| --- | --- | --- | --- | --- |
| site1-az1 | 10.34.0.0/18 | Antrea | 1 | 2 |
| site1-az2 | 10.34.64.0/18 | Antrea | 1 | 2 |
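To make the two blocks in the table concrete, here is a minimal sketch that expands a CIDR block into its first and last address. The function names (`ip_to_int`, `int_to_ip`, `cidr_range`) are hypothetical helpers written for this post, not part of Antrea or kubectl, and the code assumes a POSIX shell with 64-bit arithmetic.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Antrea or kubectl).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print "<first> <last>" for a CIDR block such as 10.34.0.0/18.
cidr_range() (
  base=$(ip_to_int "${1%/*}")       # address part before the slash
  prefix=${1#*/}                    # prefix length after the slash
  size=$(( 1 << (32 - prefix) ))    # number of addresses in the block
  first=$(( base & ~(size - 1) ))   # clear the host bits
  last=$(( first + size - 1 ))
  echo "$(int_to_ip "$first") $(int_to_ip "$last")"
)
```

Running `cidr_range 10.34.0.0/18` and `cidr_range 10.34.64.0/18` shows that the two clusters use adjacent, non-overlapping ranges (10.34.0.0 to 10.34.63.255 and 10.34.64.0 to 10.34.127.255).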

Before starting, the cluster must be in a Ready status. We can check the current status of our k8s cluster using kubectl commands. To operate a Kubernetes cluster with the kubectl command line you need a kubeconfig file that contains the authentication credentials needed to access the desired cluster via API. An easy way to gain access is to jump onto the Master node. Assuming a proper kubeconfig file is at $HOME/.kube/config, you can check the status of the Kubernetes cluster nodes at Site1 AZ1 using kubectl as shown below.

kubectl get nodes

NAME STATUS ROLES AGE VERSION

site1-az1-k8s-master01 Ready master 29d v1.18.10

site1-az1-k8s-worker01 Ready <none> 29d v1.18.10

site1-az1-k8s-worker02 Ready <none> 29d v1.18.10

In a similar way, you can SSH to the master node of the Site1 AZ2 cluster and check the status of that particular cluster.

kubectl get nodes

NAME STATUS ROLES AGE VERSION

site1-az2-k8s-master01 Ready master 29d v1.18.10

site1-az2-k8s-worker01 Ready <none> 29d v1.18.10

site1-az2-k8s-worker02 Ready <none> 29d v1.18.10

Understanding pod reachability

As mentioned, the Virtual Services hosted in the Service Engines act as the frontend for exposing our K8s external services. On the other hand, we need to ensure that the Service Engines can reach the pod networks to complete the data path. Generally the pod network is a non-routable network used internally to provide pod-to-pod connectivity and is therefore not reachable from the outside. As you can imagine, we have to find a way to let external traffic in to accomplish the load balancing function.

One common way to do this is to use a k8s feature called NodePort. NodePort exposes the service on each Node's IP at a static port, and you can connect to the NodePort service from outside the cluster by requesting <NodeIP>:<NodePort>. The port is fixed for the service and is allocated, by default, from the range 30000–32767. With this feature you can contact any of the workers in the cluster on the allocated port to reach the desired deployment (application) behind that exposed service. Note that you use NodePort without knowing where (i.e. on which worker node) the Pods for that service are actually running.

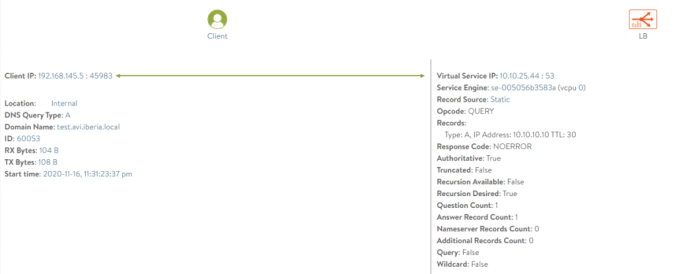

With NodePort in mind, let's figure out how our AVI external load balancer would work in an environment in which we use NodePort to expose our applications. Imagine a deployment like the one represented in the picture below. There are two sample deployments: hackazon and kuard. The hackazon deployment has just one pod replica whereas the kuard deployment has two. The k8s scheduler has decided to place the pods as represented in the figure. At the top of the diagram you can see how our external Service Engine would expose the corresponding virtual services in the FrontEnd network and create a server pool made up of the NodePort endpoints. In this case, for the hackazon.avi.iberia.local virtual service, a three-member server pool would be created, distributing traffic to 10.10.24.161:32222, 10.10.24.162:32222 and 10.10.24.163:32222. The traffic would be distributed evenly across the pool even though the actual Pod is running only at Worker 01. Moreover, since the NodePort is just an abstraction of the actual Deployment, as long as one Pod is up and running the NodePort appears to be up from a health-check perspective. The same would happen with the kuard.avi.iberia.local virtual service.

As you can see, this approach cannot take into account how the actual Pods behind the exposed service are distributed across the k8s cluster, which can lead to inefficient east-west traffic among K8s worker nodes. Also, since we are exposing a service and not the actual endpoint (the Pod), we cannot take advantage of some interesting features such as Pod health monitoring or, what is sometimes a requirement, server persistence.

Although NodePort-based reachability is still an option, the AKO integration proposes a much better approach that overcomes the previous limitations. Since the worker nodes are able to forward IPv4 packets, and because the CNI knows the IP addressing range assigned to every K8s node, we can predict the full range of IP addresses the Pods will take once created.
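The NodePort-based pool described above can be sketched in a few lines of shell. This is only an illustration of how the member list is derived: `nodeport_pool` is a hypothetical helper, not something AKO or AVI provides, and it assumes the default NodePort range.

```shell
#!/bin/sh
# Hypothetical helper: print one pool member (<NodeIP>:<NodePort>) per node.
# Usage: nodeport_pool <nodePort> <nodeIP>...
nodeport_pool() {
  port=$1
  shift
  # Reject ports outside the default NodePort range.
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "NodePort $port is outside 30000-32767" >&2
    return 1
  fi
  # Every node becomes a member, whether or not it runs a Pod of the service.
  for ip in "$@"; do
    echo "$ip:$port"
  done
}
```

Calling `nodeport_pool 32222 10.10.24.161 10.10.24.162 10.10.24.163` prints the three members used for hackazon in the example. Nothing in the input says where the Pod actually runs, which is exactly the limitation discussed above.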

You can check the CIDR block that the Antrea CNI has allocated to each of the nodes in the cluster using kubectl describe:

kubectl describe node site1-az1-k8s-worker01

Name: site1-az1-k8s-worker01

Roles:

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=site1-az1-k8s-worker01

kubernetes.io/os=linux

Addresses:

InternalIP: 10.10.24.161

Hostname: site1-az1-k8s-worker01

< ... skipped output ... >

PodCIDR: 10.34.2.0/24

PodCIDRs: 10.34.2.0/24

< ... skipped output ... >

Another fancy way to get this info is to use the JSON output format. With the jq tool you can parse the output and get the info you need in a single-line command like this:

kubectl get nodes -o json | jq '[.items[] | {name: .metadata.name, podCIDRS: .spec.podCIDR, NodeIP: .status.addresses[0].address}]'

[

{

"name": "site1-az1-k8s-master01",

"podCIDRS": "10.34.0.0/24",

"NodeIP": "10.10.24.160"

},

{

"name": "site1-az1-k8s-worker01",

"podCIDRS": "10.34.2.0/24",

"NodeIP": "10.10.24.161"

},

{

"name": "site1-az1-k8s-worker02",

"podCIDRS": "10.34.1.0/24",

"NodeIP": "10.10.24.162"

}

]

To sum up, in order to achieve IP reachability to the podCIDR networks, the idea is to create a set of static routes that use the NodeIP as next-hop to reach the PodCIDR assigned to every individual Kubernetes node. For example, a route to 10.34.2.0/24 pointing to next-hop 10.10.24.161 reaches the Pods at site1-az1-k8s-worker01, and so on. Of course, one of the AKO functions is to do this programmatically, so this will be one of the first actions AKO performs at bootup.
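As a rough illustration of the routes described above, the sketch below turns a list of nodes into the static routes they would need. `pod_routes` is a hypothetical helper and the output format is made up for readability; AKO creates the real routes through the AVI API.

```shell
#!/bin/sh
# Hypothetical helper: read "<nodeName> <podCIDR> <nodeIP>" lines on stdin
# and print the static route each node needs (podCIDR via nodeIP).
pod_routes() {
  awk 'NF >= 3 { printf "%s via %s (%s)\n", $2, $3, $1 }'
}

# Possible input, reusing the jq approach from above with raw output:
#   kubectl get nodes -o json \
#     | jq -r '.items[] | "\(.metadata.name) \(.spec.podCIDR) \(.status.addresses[0].address)"' \
#     | pod_routes
```

For the AZ1 cluster this would list one route per node, for example `10.34.2.0/24 via 10.10.24.161 (site1-az1-k8s-worker01)`.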

AVI Kubernetes Operator (AKO) Installation

AKO will run as a pod in a dedicated namespace that we will create, called avi-system. Currently AKO is packaged as a Helm chart. Helm uses a packaging format called charts for creating Kubernetes objects. A chart is a collection of files that describe a related set of Kubernetes resources. We need to install Helm before deploying AKO.

There are different methods to install Helm. Since I am using Ubuntu here, I will use the snap package manager, which is the easiest.

sudo snap install helm --classic

helm 3.4.1 from Snapcrafters installed

The next step is to add the AVI AKO repository, which includes the AKO helm chart, to our local helm.

helm repo add ako https://projects.registry.vmware.com/chartrepo/ako

"ako" has been added to your repositories

Now we can search the helm charts available in the repository we just added, as shown below.

helm search repo

NAME CHART VERSION APP VERSION DESCRIPTION

ako/ako 1.4.2 1.4.2 A helm chart for AKO

ako/ako-operator 1.3.1 1.3.1 A Helm chart AKOO

ako/amko 1.4.1 1.4.1 A helm chart for AMKO

The next step is to create a new k8s namespace named avi-system in which we will place the AKO Pod.

kubectl create namespace avi-system

namespace/avi-system created

We have to pass some configuration to the AKO Pod. This is done by means of a values.yaml file in which we populate the configuration parameters that, among other things, allow AKO to communicate with the AVI Controller. The full list of values and their descriptions can be found here. You can get a default values.yaml file using the following command:

helm show values ako/ako --version 1.4.2 > values.yaml

Now open the values.yaml file and change the values as shown in the table below to match our particular environment in the Site 1 AZ1 k8s cluster. You can find the values.yaml file I am using here, just for reference.

| Parameter | Value | Description |

| AKOSettings.disableStaticRouteSync | false | Allow the AKO to create static routes to achieve POD network connectivity |

| AKOSettings.clusterName | S1-AZ1 | A descriptive name for the cluster. Controller will use this value to prefix related Virtual Service objects |

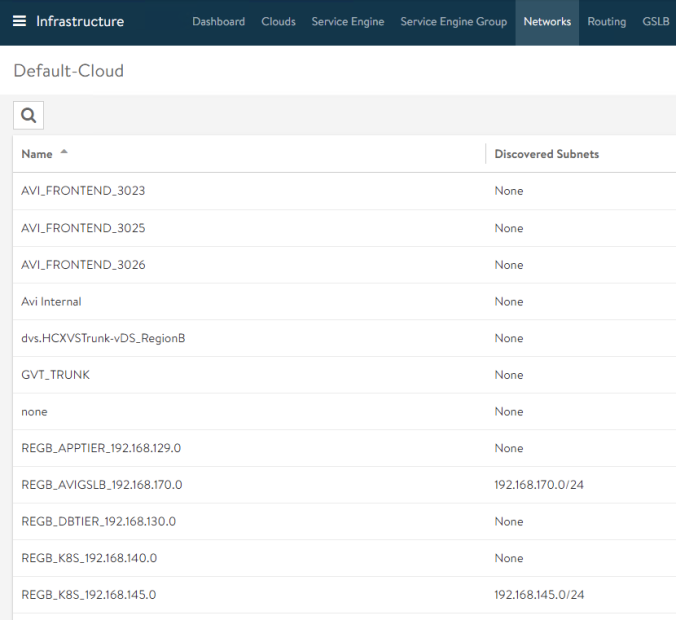

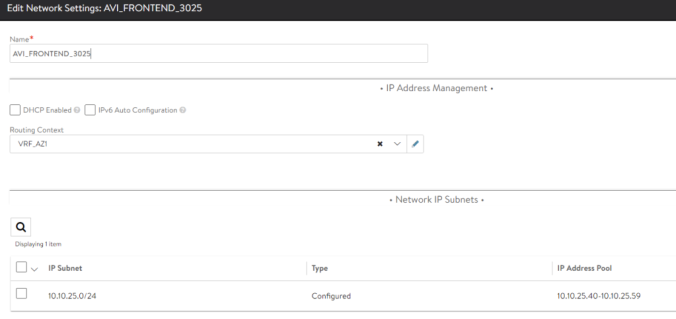

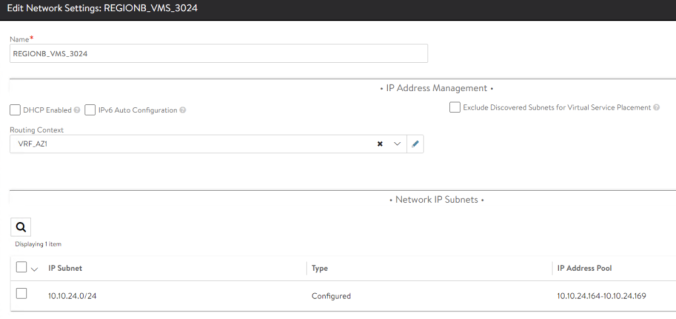

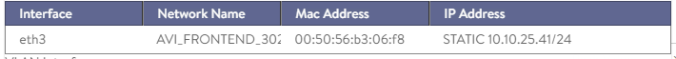

| NetworkSettings.subnetIP | 10.10.25.0 | Network in which create the Virtual Service Objects at AVI SE. Must be in the same VRF as the backend network used to reach k8s nodes. It must be configured with a static pool or DHCP to allocate IP address automatically. |

| NetworkSettings.subnetPrefix | 24 | Mask lenght associated to the subnetIP for Virtual Service Objects at SE. |

| NetworkSettings.vipNetworkList: – networkName | AVI_FRONTEND_3025 | Name of the AVI Network object hat will be used to place the Virtual Service objects at AVI SE. |

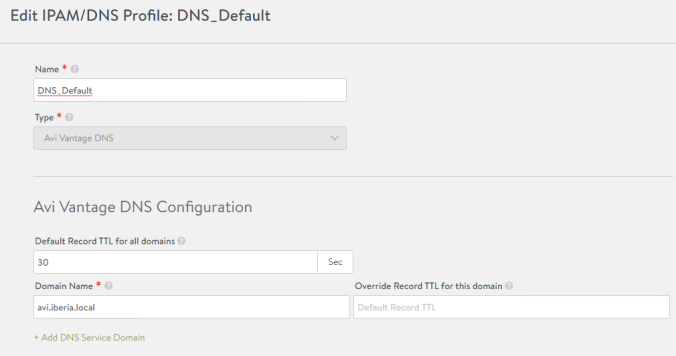

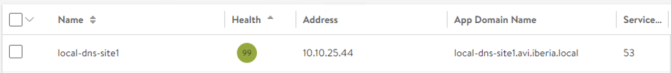

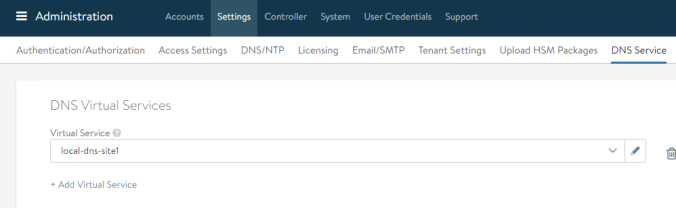

| L4Settings.defaultDomain | avi.iberia.local | This domain will be used to place the LoadBalancer service types in the AVI SEs. |

| ControllerSettings.serviceEngineGroupName | S1-AZ1-SE-Group | Name of the Service Engine Group that AVI Controller use to spin up the Service Engines |

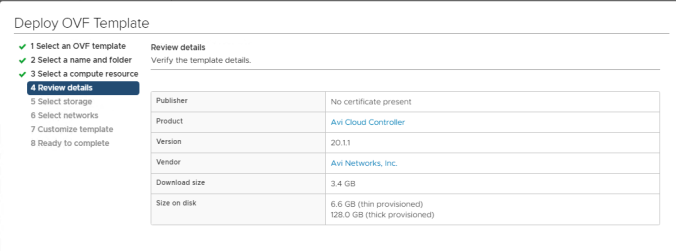

| ControllerSettings.controllerVersion | 20.1.2 | Controller API version |

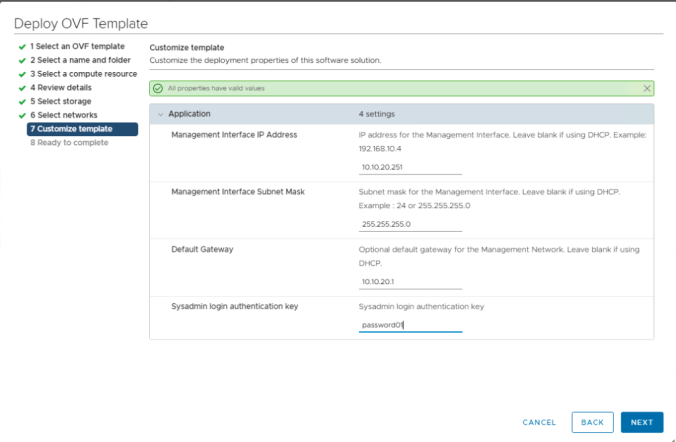

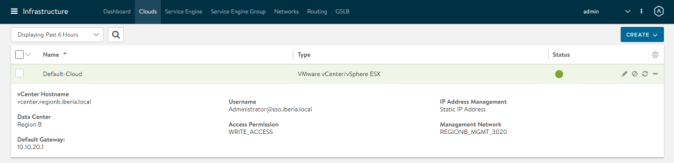

| ControllerSettings.controllerIP | 10.10.20.43 | IP Address of the AVI Controller |

| avicredentials.username | admin | Username to get access to the AVI Controller |

| avicredentials.password | password01 | Password to get access to the AVI Controller |
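Before installing, it can help to confirm that every parameter from the table has been filled in. The sketch below is a hypothetical pre-flight check written for this post, not an AKO or helm feature. It matches keys by their leaf name only, assumes one key per line, and treats any non-blank, non-comment text after the colon as a value.

```shell
#!/bin/sh
# Hypothetical pre-flight check: report table parameters that are missing
# or empty in the given values file. Returns non-zero if any is missing.
check_values() {
  file=$1
  missing=0
  for key in clusterName disableStaticRouteSync subnetIP subnetPrefix \
             networkName defaultDomain serviceEngineGroupName \
             controllerVersion controllerIP username password; do
    # Key at the start of a line (optionally indented or a list item),
    # followed by a non-empty value that is not just a comment.
    if ! grep -Eq "^[[:space:]-]*${key}:[[:space:]]*[^[:space:]#]" "$file"; then
      echo "missing or empty: $key"
      missing=1
    fi
  done
  return "$missing"
}
```

A typical call would be `check_values values.yaml` from the directory that holds the file. Because only leaf names are checked, a key placed under the wrong section would still pass, so this complements rather than replaces a careful read of the file.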

Save values.yaml to a local file. The next step is to install the AKO component through helm, passing the chart version and the values.yaml file as input parameters:

helm install ako/ako --generate-name --version 1.4.2 -f values.yaml -n avi-system

NAME: ako-1605611539

LAST DEPLOYED: Tue Jun 06 12:12:20 2021

NAMESPACE: avi-system

STATUS: deployed

REVISION: 1

We can list the deployed chart using the helm list command within the avi-system namespace:

helm list -n avi-system

NAME NAMESPACE REVISION STATUS CHART APP

ako-1605611539 avi-system 1 deployed ako-1.4.2 1.4.2

This chart will create all the k8s resources needed by AKO to perform its functions. The main resource is the pod. We can check the status of the AKO pod using kubectl commands.

kubectl get pods -n avi-system

NAME READY STATUS RESTARTS AGE

ako-0 1/1 Running 0 5m45s

In case we experience problems (e.g. STATUS is stuck in ContainerCreating or RESTARTS shows a large number of restarts), we can always use standard kubectl commands such as kubectl logs or kubectl describe pod for troubleshooting and debugging.

If we need to update values.yaml, we must delete and recreate the AKO resources by means of helm. I have created a simple restart script named ako_reload.sh, which can be found here, that looks up the existing AKO helm release, deletes it and recreates it using the values.yaml file in the current directory. This saves some time and also keeps the installation up to date, because the script picks the most recent version of the ako chart in the AKO repository. The values.yaml file must be in the directory from which the script is run for it to work.
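The two symptoms mentioned above (a pod that is not Running, or one that keeps restarting) can be spotted with a small filter over the `kubectl get pods` output. This is a minimal sketch: `unhealthy_pods` is a hypothetical helper, and the default threshold of 3 restarts is an arbitrary assumption you may want to tune.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods` output on stdin and print the
# pods that are not Running or that restarted more than the given threshold.
# Returns non-zero if any such pod is found.
unhealthy_pods() {
  max_restarts=${1:-3}   # assumed default threshold
  awk -v max="$max_restarts" '
    NR == 1 { next }     # skip the header row
    $3 != "Running" || $4 + 0 > max { print $1, $3, "restarts=" $4; bad = 1 }
    END { exit bad }
  '
}

# Possible usage:
#   kubectl get pods -n avi-system | unhealthy_pods 5
```

Any pod it reports is a good candidate for `kubectl describe pod` and `kubectl logs`.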

#!/bin/bash

# Update the helm repo to pick up the latest AKO version

helm repo add ako https://projects.registry.vmware.com/chartrepo/ako

helm repo update

# Get the newest AKO chart version (column 2 of "helm search repo"); --version expects the chart version

chartVersion=$(helm search repo | grep ako/ako | grep -v operator | awk '{print $2}')

# Get the release name of the currently deployed chart

akoRelease=$(helm list -n avi-system | grep ako | awk '{print $1}')

# Delete the existing helm release and install a new one

helm delete "$akoRelease" -n avi-system

helm install ako/ako --generate-name --version "$chartVersion" -f values.yaml --namespace avi-system

Make the script executable and simply run it each time you want to refresh the AKO installation. If this is not the first time you run the script, the first message warns that the repo was already added; just ignore it.

chmod +x ako_reload.sh

./ako_reload.sh

"ako" already exists with the same configuration, skipping

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "ako" chart repository

Update Complete. ⎈Happy Helming!⎈

release "ako-1622738990" uninstalled

NAME: ako-1623094629

LAST DEPLOYED: Mon Jun 7 19:37:11 2021

NAMESPACE: avi-system

STATUS: deployed

REVISION: 1

To verify that everything is running properly and that communication with the AVI Controller has been established, we can check whether the static routes in the VRF have been populated to attain the required pod reachability mentioned before. It is also interesting to follow the AKO application with standard kubectl logs to see how the different events and API calls occur.

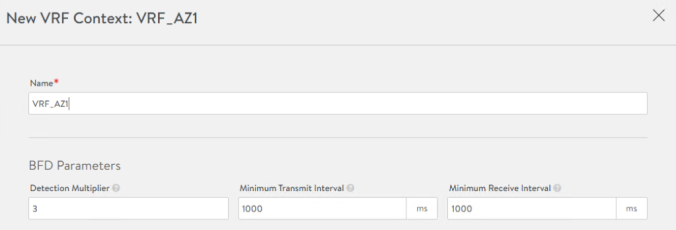

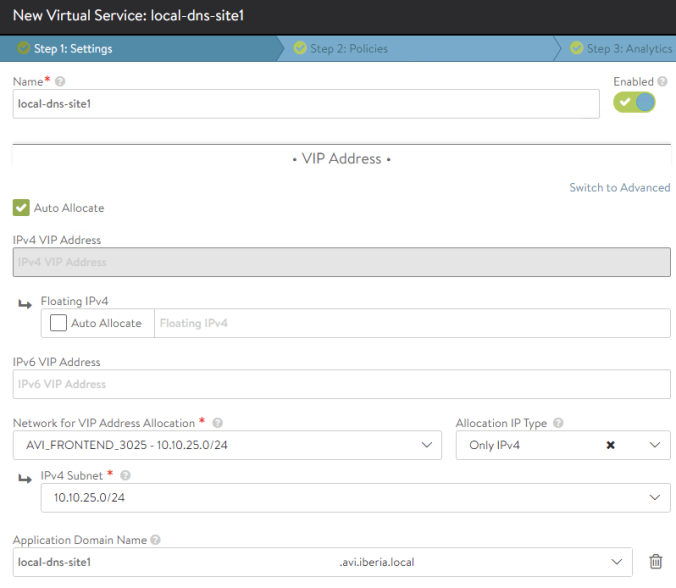

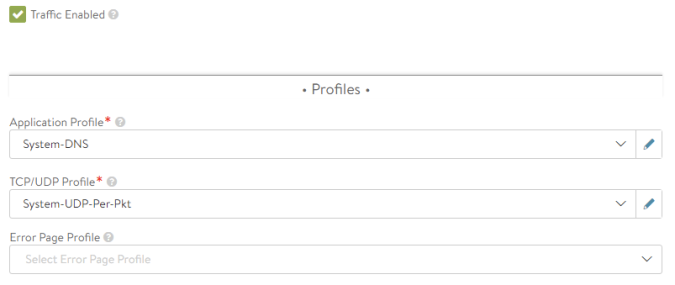

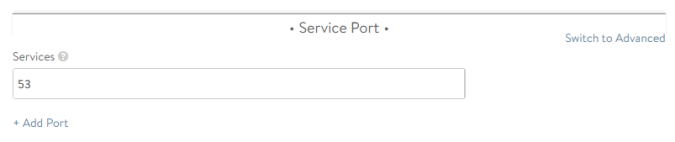

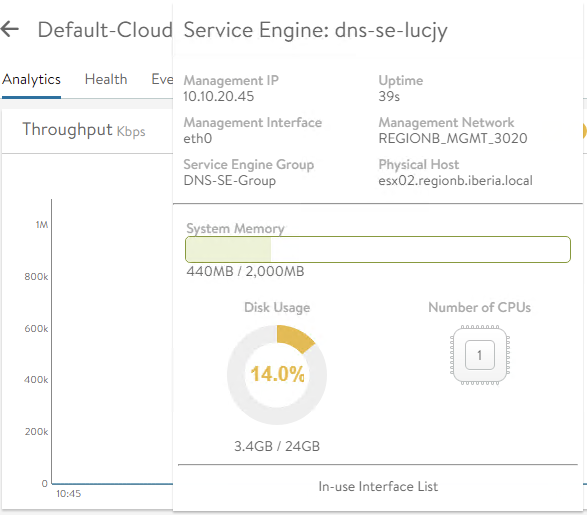

For example, we can see how, in the first step, AKO discovers the AVI Controller infrastructure and the type of cloud integration (vCenter). It also discovers the VRF in which it has to create the routes to achieve Pod reachability. In this case the VRF is inferred from the properties of the selected AVI_FRONTEND_3025 network at the AVI Controller (remember this is the NetworkSettings.vipNetworkList parameter we used in our values.yaml configuration file) and corresponds to VRF_AZ1, as shown below:

kubectl logs -f ako-0 -n avi-system

INFO cache/controller_obj_cache.go:2558

Setting cloud vType: CLOUD_VCENTER

INFO cache/controller_obj_cache.go:2686

Setting VRF VRF_AZ1 found from network AVI_FRONTEND_3025

A little further down we can see how AKO creates the static routes in the AVI Controller to obtain POD reachability.

INFO nodes/avi_vrf_translator.go:64 key: Node/site1-az1-k8s-worker02, Added vrf node VRF_AZ1

INFO nodes/avi_vrf_translator.go:65 key: Node/site1-az1-k8s-worker02, Number of static routes 3

As you can guess, the AVI GUI should now reflect this configuration. If we go to Infrastructure > Routing > Static Routes we should see that three new routes have been created in the desired VRF to direct traffic towards the PodCIDR networks allocated to each node by the CNI. The backend IP address of each node is used as next-hop.

We will complete the AKO configuration for the second k8s cluster at a later stage, since we will focus on a single cluster for now. Now that reachability is in place, it's time to move to the next level and start creating the k8s resources.
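The route count reported in the log can also be pulled out with a one-line filter, which is handy for comparing it with the number of nodes. `static_route_count` is a hypothetical helper and it assumes the log message keeps the "Number of static routes N" wording shown above.

```shell
#!/bin/sh
# Hypothetical helper: read AKO log lines on stdin and print the most
# recently reported static route count (prints nothing if none is found).
static_route_count() {
  sed -n 's/.*Number of static routes \([0-9][0-9]*\).*/\1/p' | tail -n 1
}

# Possible usage, comparing against the number of nodes in the cluster:
#   kubectl logs ako-0 -n avi-system | static_route_count
#   kubectl get nodes --no-headers | wc -l
```

In this lab both numbers should be 3: one route per node, master included.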