Creating first LoadBalancer Object

Once reachability is in place, it’s time to create our first Kubernetes services. AKO is a Kubernetes operator that runs as a pod, watches Kubernetes Service objects of type LoadBalancer as well as Ingress objects, and configures them accordingly in the Service Engine to serve the traffic. Let’s focus on the LoadBalancer service for now. A LoadBalancer service is a common way in Kubernetes to expose L4 (non-HTTP) services to the external world.

Let’s create the first service using kubectl imperative commands. First we will create a simple deployment using kuard, a popular application for testing, with the container image shown in the kubectl command below. After creating the deployment, we can see Kubernetes starting the pod creation process.

kubectl create deployment kuard --image=gcr.io/kuar-demo/kuard-amd64:1

deployment.apps/kuard created

kubectl get pods

NAME READY STATUS RESTARTS AGE

kuard-74684b58b8-hmxrs 1/1 Running 0 3s

As you can see, the scheduler has placed the newly created pod on the worker node site1-az1-k8s-worker02 and the IP 10.34.1.8 has been allocated.

kubectl describe pod kuard-74684b58b8-hmxrs

Name: kuard-74684b58b8-hmxrs

Namespace: default

Priority: 0

Node: site1-az1-k8s-worker02/10.10.24.162

Start Time: Thu, 03 Dec 2020 17:48:01 +0100

Labels: app=kuard

pod-template-hash=74684b58b8

Annotations: <none>

Status: Running

IP: 10.34.1.8

IPs:

IP: 10.34.1.8

Remember that this network is not routable from the outside unless we create a static route pointing to the node IP address as next hop. AKO performs this configuration task for us automatically, as explained in the previous article. To expose our kuard deployment externally, we create a LoadBalancer service. As usual, we will use kubectl imperative commands to do so. In this case, kuard listens on port 8080.

kubectl expose deployment kuard --port=8080 --type=LoadBalancer

Let’s see what is happening under the hood by looking at the AKO pod logs. The following events are triggered by AKO as soon as we create the new LoadBalancer service. We can display them using kubectl logs ako-0 -n avi-system.

kubectl logs ako-0 -n avi-system

# AKO detects the new k8s object and triggers the VS creation

2020-12-11T09:44:23.847Z INFO nodes/dequeue_ingestion.go:135 key: L4LBService/default/kuard, msg: service is of type loadbalancer. Will create dedicated VS nodes

# A set of attributes and configurations will be used for VS creation

# including Network Profile, ServiceEngineGroup, Name of the service ...

# naming will be derived from the cluster name set in values.yaml file

2020-12-11T09:44:23.847Z INFO nodes/avi_model_l4_translator.go:97 key: L4LBService/default/kuard, msg: created vs object: {"Name":"S1-AZ1--default-kuard","Tenant":"admin","ServiceEngineGroup":"S1-AZ1-SE-Group","ApplicationProfile":"System-L4-Application","NetworkProfile":"System-TCP-Proxy","PortProto":[{"PortMap":null,"Port":8080,"Protocol":"TCP","Hosts":null,"Secret":"","Passthrough":false,"Redirect":false,"EnableSSL":false,"Name":""}],"DefaultPool":"","EastWest":false,"CloudConfigCksum":0,"DefaultPoolGroup":"","HTTPChecksum":0,"SNIParent":false,"PoolGroupRefs":null,"PoolRefs":null,"TCPPoolGroupRefs":null,"HTTPDSrefs":null,"SniNodes":null,"PassthroughChildNodes":null,"SharedVS":false,"CACertRefs":null,"SSLKeyCertRefs":null,"HttpPolicyRefs":null,"VSVIPRefs":[{"Name":"S1-AZ1--default-kuard","Tenant":"admin","CloudConfigCksum":0,"FQDNs":["kuard.default.avi.iberia.local"],"EastWest":false,"VrfContext":"VRF_AZ1","SecurePassthoughNode":null,"InsecurePassthroughNode":null}],"L4PolicyRefs":null,"VHParentName":"","VHDomainNames":null,"TLSType":"","IsSNIChild":false,"ServiceMetadata":{"namespace_ingress_name":null,"ingress_name":"","namespace":"default","hostnames":["kuard.default.avi.iberia.local"],"svc_name":"kuard","crd_status":{"type":"","value":"","status":""},"pool_ratio":0,"passthrough_parent_ref":"","passthrough_child_ref":""},"VrfContext":"VRF_AZ1","WafPolicyRef":"","AppProfileRef":"","HttpPolicySetRefs":null,"SSLKeyCertAviRef":""}

# A new pool is created using the existing endpoints in K8s that represent the deployment

2020-12-11T09:44:23.848Z INFO nodes/avi_model_l4_translator.go:124 key: L4LBService/default/kuard, msg: evaluated L4 pool values :{"Name":"S1-AZ1--default-kuard--8080","Tenant":"admin","CloudConfigCksum":0,"Port":8080,

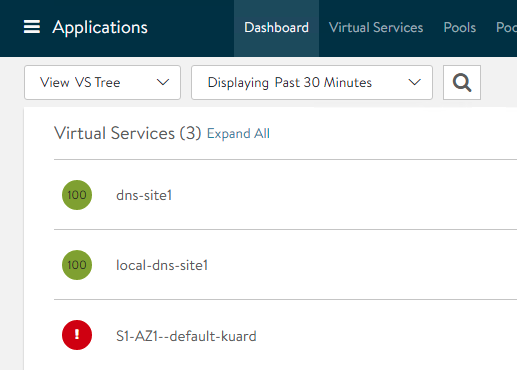

"TargetPort":0,"PortName":"","Servers":[{"Ip":{"addr":"10.34.1.8","type":"V4"},"ServerNode":"site1-az1-k8s-worker02"}],"Protocol":"TCP","LbAlgorithm":"","LbAlgorithmHash":"","LbAlgoHostHeader":"","IngressName":"","PriorityLabel":"","ServiceMetadata":{"namespace_ingress_name":null,"ingress_name":"","namespace":"","hostnames":null,"svc_name":"","crd_status":{"type":"","value":"","status":""},"pool_ratio":0,"passthrough_parent_ref":"","passthrough_child_ref":""},"SniEnabled":false,"SslProfileRef":"","PkiProfile":null,"VrfContext":"VRF_AZ1"}If we move to the Controller GUI we can notice how a new Virtual Service has been automatically provisioned

The red color indicates that the Virtual Service still needs a Service Engine to perform its function in the data plane. If you hover the mouse over the Virtual Service object, a notification is shown confirming that it is waiting for the SE to be deployed.

The AVI controller will ask the infrastructure cloud provider (vCenter in this case) to create this virtual machine automatically.

After a couple of minutes, the new Service Engine that belongs to our Service Engine Group is ready and has been plugged automatically into the required networks. In our example, because we are using a two-arm deployment, the SE needs a vNIC interface to reach the backend network and also a frontend vNIC interface to answer external ARP requests coming from the clients. Remember that IPAM is one of the integrated services that AVI provides, so the Controller will allocate all the needed IP addresses automatically on our behalf.

After some minutes, the VS turns green. We can expand the new VS to visualize the related objects such as the VS, the server pool, the backend network and the k8s endpoints (pods) that are used as members of the server pool. We can also see the name of the SE on which the VS is currently running.

As you probably know, a deployment resource has an associated ReplicaSet controller that is used, as its name implies, to control the number of individual replicas for a particular deployment. We can use kubectl commands to scale our deployment in or out just by changing the number of replicas. As you can guess, AKO needs to be aware of any change in the deployment so that it is reflected accordingly in the AVI Virtual Service realization at the Service Engines. Let’s scale out our deployment.

kubectl scale deployment/kuard --replicas=5

deployment.apps/kuard scaled

This creates new pods that act as endpoints for the same service. The new endpoints become members of the server pool of the AVI Virtual Service object, as its graphical representation shows.
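To cross-check the pool members against what Kubernetes reports, the endpoints of the service can be listed with kubectl get endpoints kuard -o json and parsed. The following is a minimal Python sketch and not part of AKO: the function name `endpoint_members` and the sample payload are illustrative assumptions (the sample reuses the pod IP and node name seen earlier), and only the standard `subsets[].addresses[]` layout of the Endpoints object is relied upon.

```python
import json


def endpoint_members(endpoints_json: str) -> list[tuple[str, str]]:
    """Return sorted (pod IP, node name) pairs from
    `kubectl get endpoints <svc> -o json` output."""
    doc = json.loads(endpoints_json)
    members = []
    # "subsets" is absent when the service has no ready endpoints
    # (for example, after scaling the deployment to zero replicas).
    for subset in doc.get("subsets") or []:
        for addr in subset.get("addresses") or []:
            members.append((addr["ip"], addr.get("nodeName", "")))
    return sorted(members)


# Illustrative sample, trimmed to the fields used above.
SAMPLE = json.dumps({
    "kind": "Endpoints",
    "metadata": {"name": "kuard", "namespace": "default"},
    "subsets": [{
        "addresses": [
            {"ip": "10.34.1.8", "nodeName": "site1-az1-k8s-worker02"},
        ],
        "ports": [{"port": 8080, "protocol": "TCP"}],
    }],
})

if __name__ == "__main__":
    for ip, node in endpoint_members(SAMPLE):
        print(f"{ip}\t{node}")
```

After the scale-out, the list returned for the live cluster should contain one entry per replica, and the same IP addresses should appear as servers in the pool.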

AVI as DNS Resolver for created objects

DNS is another integrated service that AVI provides, so once the Virtual Service is ready, its name should be registered in the AVI DNS. If we go to Applications > Virtual Service > local-dns-site1, the DNS Records tab shows the new DNS record added automatically.
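The record name follows the pattern already visible in the AKO log entry ("FQDNs":["kuard.default.avi.iberia.local"]): service name, then namespace, then the DNS subdomain. A minimal Python sketch of that pattern, inferred from this lab's output rather than taken from AKO's code (the helper name `l4_fqdn` is hypothetical):

```python
def l4_fqdn(service: str, namespace: str, subdomain: str) -> str:
    """Build <service>.<namespace>.<subdomain>, the naming pattern
    observed in this lab (an inference, not AKO's implementation)."""
    return ".".join(part.strip(".") for part in (service, namespace, subdomain))


if __name__ == "__main__":
    print(l4_fqdn("kuard", "default", "avi.iberia.local"))
```

This is handy for predicting which name to query once a new LoadBalancer service is exposed in a given namespace.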

If we query the DNS for kuard.default.avi.iberia.local, the answer contains the IP address allocated to the new service.

dig kuard.default.avi.iberia.local @10.10.25.44 +noall +answer

kuard.default.avi.iberia.local. 5 IN A 10.10.25.43

In the same way, if we scale in the deployment to zero replicas using the method described above, the Virtual Service should reflect it as well. We can see how it turns red again and how the pool has no members, since no k8s endpoints are available.

Hence, if we query for the FQDN, we should receive an NXDOMAIN answer indicating that the server is unable to resolve that name. Note, though, how the SOA record in the response indicates that the DNS server we are querying is authoritative for this particular domain.

dig kuard.default.avi.iberia.local @10.10.25.44

; <<>> DiG 9.16.1-Ubuntu <<>> kuard.default.avi.iberia.local @10.10.25.44

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 59955

;; flags: qr aa rd; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;kuard.default.avi.iberia.local. IN A

;; AUTHORITY SECTION:

kuard.default.avi.iberia.local. 30 IN SOA site1-dns.iberia.local. [email protected]. 1 10800 3600 86400 30

;; Query time: 0 msec

;; SERVER: 10.10.25.44#53(10.10.25.44)

;; WHEN: Tue Nov 17 14:43:35 CET 2020

;; MSG SIZE rcvd: 138

Let’s scale out our deployment again to have 2 replicas.

kubectl scale deployment/kuard --replicas=2

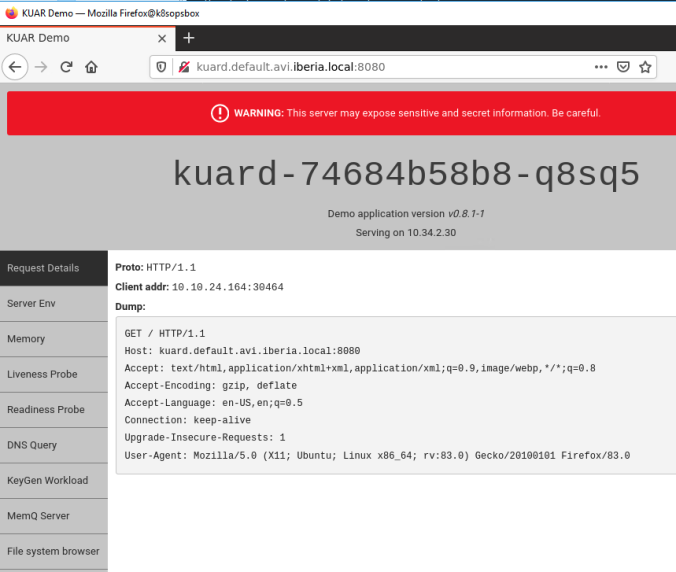

deployment.apps/kuard scaleLast, let’s verify if the L4 Load Balancing service is actually working. We can try to open the url in our preferred browser. Take into account your configured DNS should be able to forward DNS queries for default.avi.iberia.local DNS zone to success in name resolution. This can be achieved easily by configuring a Zone Delegation in your existing local DNS.
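The same check can be scripted instead of using a browser. The following is a minimal Python sketch that relies only on the standard library; the function name `check_http` and its `opener` parameter (there to allow testing without a network) are illustrative assumptions, and the URL in the usage note combines the FQDN and port seen earlier.

```python
import urllib.request


def check_http(url: str, timeout: float = 5.0, opener=urllib.request.urlopen) -> int:
    """Send a GET request to `url` and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses and
    urllib.error.URLError when the name cannot be resolved or the
    connection fails, so a returned value means the request succeeded.
    """
    with opener(url, timeout=timeout) as resp:
        return resp.status
```

Calling `check_http("http://kuard.default.avi.iberia.local:8080/")` from a machine that uses the delegated DNS should return 200 if the Virtual Service is up and has pool members; a name resolution error points to the zone delegation rather than to the load balancer.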

Exploring AVI Analytics

One of the most interesting features of using AVI as a load balancer is the rich analytics the product provides. A simple way to generate synthetic traffic is the locust tool, which is written in Python. You need Python and pip3 to get locust running; installation instructions are available in the locust documentation. We can create a simple file to mimic user activity. In this case, let’s simulate users browsing the “/” path. The contents of locustfile_kuard.py would be something like this.

import resource

from locust import HttpUser, between, task

# Raise the open-file limit so that many simulated users can hold sockets open
resource.setrlimit(resource.RLIMIT_NOFILE, (9999, 9999))

class QuickstartUser(HttpUser):
    # Each simulated user waits between 5 and 9 seconds between tasks
    wait_time = between(5, 9)

    @task(1)
    def index_page(self):
        # Browse the "/" path of the target host
        self.client.get("/")

We can now launch the locust app using the command line below. It generates traffic for 100 minutes (--run-time 100m), sending GET / requests to the URL http://10.10.25.43:8080. The tool shows some traffic statistics on stdout.

locust -f locustfile_kuard.py --headless --logfile /var/local/locust.log -r 100 -u 200 --run-time 100m --host=http://10.10.25.43:8080

To see the user activity logs, we need to enable Non-significant logs in the properties of the newly created S1-AZ1--default-kuard Virtual Service. We also need to set the Metric Update Frequency to Real Time Metrics and set it to 0 mins to speed up the process of getting activity logs into the GUI.

After this, we can enjoy the powerful analytics provided by AVI SE.

For example, we can easily diagnose some common issues such as retransmissions or timeouts for certain connections, or deviations in the end-to-end latency.

We can also see how the traffic is being distributed across the different pods. If we go to Log Analytics at the right of the screen and click on Server IP Address, we get a table showing the traffic distribution.

We can also see how the traffic evolves over time.

Now that we have a clear picture of how AKO works for LoadBalancer type services, let’s move to the next level to explore the ingress type services.