So far we have focused on a single k8s cluster deployment. Although a K8s cluster is a highly distributed architecture that improves application availability by itself, sometimes an extra layer of protection is needed to survive failures that affect all the infrastructure in a specific physical zone, such as a power outage or a natural disaster in the failure domain of the whole cluster. A common way to achieve this extra availability is to run our applications in independent clusters located in different Availability Zones, or even in different datacenters in different cities, regions or countries.

AMKO facilitates multi-cluster application deployment by extending application ingress controllers across multi-region and multi-Availability-Zone deployments, mapping the same application deployed on multiple clusters to a single GSLB service. AMKO calls the Avi Controller API to create GSLB services on the leader cluster, which then synchronizes with all follower clusters. The general diagram is represented here.

AMKO runs as an Avi pod in the Kubernetes GSLB leader cluster and, in conjunction with AKO, facilitates multicluster application deployment. We will use the following building blocks to extend our single site into a testbed architecture that will help us verify how AMKO actually works.

As you can see, the above picture represents a comprehensive georedundant architecture. I have deployed two clusters on the left side (Site1) that share the same AVI Controller and are split across two separate Availability Zones, let’s say Site1 AZ1 and Site1 AZ2. The AMKO operator will be deployed in the Site1 AZ1 cluster. On the right side we have another cluster (Site2) with a dedicated AVI Controller. At the top we have also created a “neutral” site with a dedicated controller that will act as the GSLB leader and will resolve the DNS queries from external clients trying to reach our exposed FQDNs. Each Kubernetes cluster has its own AKO component and publishes its external services in a different FrontEnd subnet: Site1 AZ1 uses the 10.10.25.0/24 network, Site1 AZ2 uses the 10.10.26.0/24 network and Site2 uses the 10.10.23.0/24 network.

Deploy AKO in the remaining K8S Clusters

AMKO works in conjunction with AKO. Basically, AKO captures the Ingress and LoadBalancer configuration occurring at the K8S cluster and calls the AVI API to translate the observed configuration into an external LoadBalancer implementation, whereas AMKO is in charge of capturing the interesting k8s objects and calling the AVI API to implement GSLB services that provide load balancing and High Availability across different k8s clusters. Having said that, before going into the configuration of the AVI GSLB we need to prepare the infrastructure and deploy AKO in all the remaining k8s clusters, in the same way we did with the first one as explained in the previous articles. The configuration yaml files for each of the AKO installations can be found here for your reference.

The parameters selected for the Site1 AZ2 AKO, saved in the site1az2_values.yaml file, are shown below.

| Parameter | Value | Description |
| --- | --- | --- |
| AKOSettings.disableStaticRouteSync | false | Allow AKO to create static routes to achieve POD network connectivity |
| AKOSettings.clusterName | S1-AZ2 | A descriptive name for the cluster. The Controller will use this value to prefix related Virtual Service objects |
| NetworkSettings.subnetIP | 10.10.26.0 | Network in which the Virtual Service objects are created at the AVI SE. Must be in the same VRF as the backend network used to reach the k8s nodes. It must be configured with a static pool or DHCP to allocate IP addresses automatically |
| NetworkSettings.subnetPrefix | 24 | Mask length associated to the subnetIP for Virtual Service objects at the SE |
| NetworkSettings.vipNetworkList: - networkName | AVI_FRONTEND_3026 | Name of the AVI Network object that will be used to place the Virtual Service objects at the AVI SE |
| L4Settings.defaultDomain | avi.iberia.local | This domain will be used to place the LoadBalancer service types in the AVI SEs |
| ControllerSettings.serviceEngineGroupName | S1-AZ2-SE-Group | Name of the Service Engine Group that the AVI Controller uses to spin up the Service Engines |
| ControllerSettings.controllerVersion | 20.1.5 | Controller API version |
| ControllerSettings.controllerIP | 10.10.20.43 | IP address of the AVI Controller. In this case it is shared with the Site1 AZ1 k8s cluster |
| avicredentials.username | admin | Username to get access to the AVI Controller |
| avicredentials.password | password01 | Password to get access to the AVI Controller |
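The dotted parameter names in the table map onto nested keys in the values file. As a rough illustration only (the helper name `nest` and the choice of Python types for the values are my own assumptions, not part of AKO), this is how a few of the rows above expand into the nested structure:

```python
def nest(flat):
    """Expand dotted keys such as "A.b" into nested dictionaries."""
    tree = {}
    for dotted_key, value in flat.items():
        node = tree
        *parents, leaf = dotted_key.split(".")
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return tree


# A subset of the Site1 AZ2 parameters listed in the table (types are assumed).
site1az2 = {
    "AKOSettings.disableStaticRouteSync": False,
    "AKOSettings.clusterName": "S1-AZ2",
    "NetworkSettings.subnetIP": "10.10.26.0",
    "NetworkSettings.subnetPrefix": 24,
    "ControllerSettings.controllerIP": "10.10.20.43",
}

values = nest(site1az2)
print(values["NetworkSettings"])
```

List-valued entries such as vipNetworkList do not fit this simple dotted form and are left out of the sketch on purpose.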

Similarly, the values selected for the AKO at Site2 are listed below.

| Parameter | Value | Description |
| --- | --- | --- |
| AKOSettings.disableStaticRouteSync | false | Allow AKO to create static routes to achieve POD network connectivity |
| AKOSettings.clusterName | S2 | A descriptive name for the cluster. The Controller will use this value to prefix related Virtual Service objects |
| NetworkSettings.subnetIP | 10.10.23.0 | Network in which the Virtual Service objects are created at the AVI SE. Must be in the same VRF as the backend network used to reach the k8s nodes. It must be configured with a static pool or DHCP to allocate IP addresses automatically |
| NetworkSettings.subnetPrefix | 24 | Mask length associated to the subnetIP for Virtual Service objects at the SE |
| NetworkSettings.vipNetworkList: - networkName | AVI_FRONTEND_3023 | Name of the AVI Network object that will be used to place the Virtual Service objects at the AVI SE |
| L4Settings.defaultDomain | avi.iberia.local | This domain will be used to place the LoadBalancer service types in the AVI SEs |
| ControllerSettings.serviceEngineGroupName | S2-SE-Group | Name of the Service Engine Group that the AVI Controller uses to spin up the Service Engines |
| ControllerSettings.controllerVersion | 20.1.5 | Controller API version |
| ControllerSettings.controllerIP | 10.10.20.44 | IP address of the AVI Controller. In this case it is the controller dedicated to Site2 |
| avicredentials.username | admin | Username to get access to the AVI Controller |
| avicredentials.password | password01 | Password to get access to the AVI Controller |

As a reminder, each cluster is made up of a single master and two worker nodes, and we will use Antrea as CNI. To deploy Antrea we need to assign a CIDR block from which IP addresses are allocated for POD networking. The following table lists the CIDR allocated per cluster.

| Cluster Name | POD CIDR Block | CNI | # Master | # Workers |
| --- | --- | --- | --- | --- |
| Site1-AZ1 | 10.34.0.0/18 | Antrea | 1 | 2 |
| Site1-AZ2 | 10.34.64.0/18 | Antrea | 1 | 2 |
| Site2 | 10.34.128.0/18 | Antrea | 1 | 2 |
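A quick sanity check on this allocation is to confirm that the three POD CIDR blocks do not overlap, which should presumably keep the static routes created by AKO unambiguous when clusters share a controller. The sketch below uses only the Python standard library; the function name `overlapping_pairs` is just an illustrative choice.

```python
import ipaddress
from itertools import combinations

# POD CIDR blocks taken from the table above.
pod_cidrs = {
    "Site1-AZ1": "10.34.0.0/18",
    "Site1-AZ2": "10.34.64.0/18",
    "Site2": "10.34.128.0/18",
}


def overlapping_pairs(cidrs):
    """Return the pairs of cluster names whose CIDR blocks overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


print(overlapping_pairs(pod_cidrs))  # an empty list means the blocks are disjoint
print(ipaddress.ip_network("10.34.0.0/18").num_addresses)  # addresses per /18 block
```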

GSLB Leader base configuration

Now that AKO is deployed in all the clusters, let’s start with the GSLB configuration. Before launching it, we need to create some base configuration at the AVI Controller located at the top of the diagram shown at the beginning, in order to prepare it to receive the dynamically created GSLB services. GSLB is a very powerful feature included in the AVI Load Balancer. A comprehensive explanation of GSLB can be found here. Note that in the proposed architecture we will define the AVI Controller located at the neutral site as the Leader Active Site, meaning this site will be responsible, totally or partially, for the following key functions:

- Definition and ongoing synchronization/maintenance of the GSLB configuration

- Monitoring the health of configuration components

- Optimizing application service for clients by providing GSLB DNS responses to their FQDN requests based on the GSLB algorithm configured

- Processing of application requests

To create the base config at the Leader site we need to complete a few steps. At the end of the day GSLB acts as an “intelligent” DNS responder. That means we need to create a Virtual Service at the Data Plane (i.e. at the Service Engines) to answer the DNS queries coming from external clients. To do so, the very first step is to define a Service Engine Group and a DNS Virtual Service. Log into the GSLB Leader AVI Controller GUI and create the Service Engine Group. As shown in previous articles, after the Controller installation you need to create the Cloud (vCenter in our case) and then select the networks and the IP ranges that will be used for Service Engine placement. The intended diagram is represented below. The DNS service will pick up an IP address from the 10.10.24.0 subnet.

As explained in previous articles, we need to create the Service Engine Group and then create a new Virtual Service using Advanced Setup. After these tasks are completed, the AVI Controller will spin up a new Service Engine and place it in the corresponding networks. If everything worked well, after a couple of minutes we should have a green DNS application in the AVI dashboard like this:

Some details of the created DNS virtual service can be displayed by hovering over the Virtual Service g-dns object. Note the assigned IP address is 10.10.24.186. This is the IP that will actually respond to DNS queries. The service port is, in this case, 53, which is the well-known port for DNS.

DNS Zone Delegation

In a typical enterprise setup, a user has a local pair of DNS servers configured that receive the DNS queries, are in charge of maintaining the local domain DNS records and also forward the requests for those domains that cannot be resolved locally (typically to the DNS of the internet provider).

DNS gives you the option to separate the namespace of the local domains into different DNS zones using a special configuration called Zone Delegation. This setup is useful when you want to delegate the management of part of your DNS namespace to another location. In our particular case AVI will be in charge of DNS resolution for the Virtual Services that we are exposing to the Internet by means of AKO. The local DNS will be in charge of the local domain iberia.local, and a Zone Delegation will instruct the local DNS to forward the DNS queries to the authoritative DNS servers of the new zone.

In our case we will create a delegated zone for the local subdomain avi.iberia.local. All the name resolution queries for that particular DNS namespace will be sent to the AVI DNS virtual service. I am using Windows Server DNS here, so I will show you how to configure a Zone Delegation using this specific DNS implementation. There are equivalent processes for doing this using Bind or other popular DNS software.
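To make the idea of delegation concrete, here is a minimal sketch of the decision the local DNS conceptually takes for each query: it looks for the most specific zone that contains the requested name. This is a simplified model written for illustration, not how Windows Server DNS is implemented; the `ZONES` dictionary and the function name `zone_for` are assumptions of mine.

```python
# Zones known to the local DNS in this lab and who answers for each of them.
ZONES = {
    "iberia.local": "local DNS",
    "avi.iberia.local": "AVI DNS virtual service (10.10.24.186)",
}


def zone_for(fqdn, zones=ZONES):
    """Return (zone, responder) for the longest zone suffix matching fqdn."""
    labels = fqdn.rstrip(".").lower().split(".")
    for start in range(len(labels)):
        candidate = ".".join(labels[start:])
        if candidate in zones:
            return candidate, zones[candidate]
    # Nothing matches: the query would go to the upstream forwarders.
    return None, "forwarders"


print(zone_for("test.avi.iberia.local"))
print(zone_for("www.iberia.local"))
```

Because the search starts from the full name and strips one label at a time, the delegated avi.iberia.local zone always wins over its parent iberia.local.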

The first step is to create a regular DNS A record in the local zone that will point to the IP of the Virtual Service that is actually serving DNS in AVI. In our case we defined a DNS Virtual Service called g-dns and the allocated IP address was 10.10.24.186. Just add a New A Record as shown below.

Now, create a New Delegation. Right-click on the local domain and select the New Delegation option.

A wizard is launched to assist you in the configuration process.

Specify the name for the delegated domain. In this case we are using avi. This will create a delegation for the avi.iberia.local subdomain.

The next step is to specify the server that will serve the requests for this new zone. In this case we will use the g-dns.iberia.local FQDN that we created previously and that resolves to the IP address of the AVI DNS Virtual Service.

If you enter the information and click Resolve, you can see how an error appears indicating that the target server is not authoritative for this domain.

If we look into the AVI logs we can find that the virtual service has received a special query type called SOA (Start of Authority) that is used to verify whether the DNS service is authoritative for a particular domain. AVI answers with NXDOMAIN, which means it is not configured to act as authoritative server for avi.iberia.local.
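For readers curious about what such a SOA query looks like on the wire, the following sketch builds one by hand following the standard DNS message layout (a 12-byte header followed by the question). It is only an illustration: the query ID and the recursion-desired flag are arbitrary choices of mine and do not claim to reproduce the exact packet Windows sends.

```python
import struct

QTYPE_SOA = 6  # record type code for SOA
QCLASS_IN = 1  # Internet class


def encode_name(fqdn):
    """Encode a domain name as length-prefixed labels ending with a zero byte."""
    encoded = b""
    for label in fqdn.rstrip(".").split("."):
        raw = label.encode("ascii")
        encoded += bytes([len(raw)]) + raw
    return encoded + b"\x00"


def build_query(fqdn, qtype=QTYPE_SOA, query_id=0x1234):
    """Build a DNS query: header (ID, flags, 1 question, 0 records) + question."""
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    question = encode_name(fqdn) + struct.pack("!HH", qtype, QCLASS_IN)
    return header + question


packet = build_query("avi.iberia.local")
print(len(packet), packet.hex())
```

Sending these bytes over UDP to port 53 of the DNS virtual service would be the natural next step, but that part is left out so the example runs without network access.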

If you want AVI to be authoritative for a particular domain, just edit the DNS Virtual Service and click on the pencil at the right of the Application Profile > System-DNS menu.

In the Domain Names/Subdomains section add the domain name. The configured domain name will be authoritatively serviced by our DNS Virtual Service. For this domain, AVI will send SOA parameters in the answer section of the response when a SOA type query is received.

Once done, you can query the DNS Virtual Service for the domain and you will receive a response that carries the SOA record of the server in its AUTHORITY section.

dig avi.iberia.local @10.10.24.186

; <<>> DiG 9.16.1-Ubuntu <<>> avi.iberia.local @10.10.24.186

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 10856

;; flags: qr aa rd; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;avi.iberia.local. IN A

;; AUTHORITY SECTION:

avi.iberia.local. 30 IN SOA g-dns.iberia.local. johernandez\@iberia.local. 1 10800 3600 86400 30

;; Query time: 4 msec

;; SERVER: 10.10.24.186#53(10.10.24.186)

;; WHEN: Sat Dec 19 09:41:43 CET 2020

;; MSG SIZE rcvd: 123

In the same way, you can see how the Windows DNS Service now validates the server information because, as shown above, it is responding to the SOA query type, indicating that it is authoritative for the intended avi.iberia.local delegated domain.
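The SOA line in the AUTHORITY section of the dig output packs several fields in a fixed order: primary name server, responsible mailbox, serial, refresh, retry, expire and minimum TTL. A small parsing sketch makes them explicit; the `Soa` type and `parse_soa` helper are names I made up for this example.

```python
from typing import NamedTuple


class Soa(NamedTuple):
    zone: str
    ttl: int
    mname: str    # primary name server
    rname: str    # mailbox of the responsible person
    serial: int
    refresh: int
    retry: int
    expire: int
    minimum: int


def parse_soa(line):
    """Parse one SOA record line as printed by dig."""
    zone, ttl, _rclass, rtype, mname, rname, *timers = line.split()
    if rtype != "SOA" or len(timers) != 5:
        raise ValueError("not a SOA record line")
    return Soa(zone, int(ttl), mname, rname, *map(int, timers))


# Record copied from the dig output above.
record = parse_soa(
    "avi.iberia.local. 30 IN SOA g-dns.iberia.local. "
    r"johernandez\@iberia.local. 1 10800 3600 86400 30"
)
print(record.mname, record.serial, record.minimum)
```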

If we explore the logs now we can see how our AVI DNS Virtual Service sends a NOERROR message when a SOA query for the domain avi.iberia.local is received. This indicates to the upstream DNS server that this is a legitimate server to forward queries to when someone tries to resolve an FQDN that belongs to the delegated domain. Although answering SOA is a kind of best practice, the MSFT DNS server will send queries directed to the delegated domain towards the configured downstream DNS servers even if it is not getting a SOA response for that particular delegated domain.

As you can see, the Zone Delegation process simply consists of creating a special Name Server (NS) type record that points to our DNS Virtual Service when a DNS query for avi.iberia.local is received.

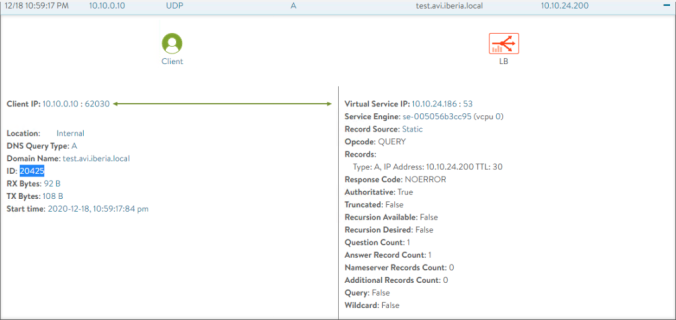

To test the delegation we can create a dummy record. Edit the DNS Virtual Service by clicking the pencil icon and go to the Static DNS Records tab. Then create a new DNS record such as test.avi.iberia.local and set an IP address of your choice, in this case 10.10.24.200.

In case you need extra debugging to go deeper into how the local DNS server is actually handling the DNS queries, you can always enable debugging at the MSFT DNS. Open the DNS application from Windows Server, click on your server, go to Action > Properties and then click on the Debug Logging tab. Select Log packets for debugging. Also specify a File Path and Name in the Log File section at the bottom.

Now it’s time to test how everything works together. Using the dig tool from a local client configured to use the local DNS servers in which we have created the Zone Delegation, try to resolve the test.avi.iberia.local FQDN.

dig test.avi.iberia.local

; <<>> DiG 9.16.1-Ubuntu <<>> test.avi.iberia.local

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 20425

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 65494

;; QUESTION SECTION:

;test.avi.iberia.local. IN A

;; ANSWER SECTION:

test.avi.iberia.local. 29 IN A 10.10.24.200

;; Query time: 7 msec

;; SERVER: 127.0.0.53#53(127.0.0.53)

;; WHEN: Fri Dec 18 22:59:17 CET 2020

;; MSG SIZE rcvd: 66

Open the log file you have defined for debugging in Windows DNS Server and look for the interesting query (you can use your favourite editor and search for some strings to locate the logs). The following recursive events shown in the log correspond to the expected behaviour for a Zone Delegation.

# Query Received from client for test.avi.iberia.local

18/12/2020 22:59:17 1318 PACKET 000002DFAB069D40 UDP Rcv 192.168.145.5 d582 Q [0001 D NOERROR] A (4)test(3)avi(6)iberia(5)local(0)

# Query sent to the NS for avi.iberia.local zone at 10.10.24.186

18/12/2020 22:59:17 1318 PACKET 000002DFAA1BB560 UDP Snd 10.10.24.186 4fc9 Q [0000 NOERROR] A (4)test(3)avi(6)iberia(5)local(0)

# Answer received from 10.10.24.186 which is the g-dns Virtual Service

18/12/2020 22:59:17 1318 PACKET 000002DFAA052170 UDP Rcv 10.10.24.186 4fc9 R Q [0084 A NOERROR] A (4)test(3)avi(6)iberia(5)local(0)

# Response sent to the originating client

18/12/2020 22:59:17 1318 PACKET 000002DFAB069D40 UDP Snd 192.168.145.5 d582 R Q [8081 DR NOERROR] A (4)test(3)avi(6)iberia(5)local(0)

Note how the ID of the AVI DNS response is 20425 as shown below and corresponds to 4fc9 in hexadecimal as shown in the log trace of the MS DNS Server above.
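If you want to locate a query in the Windows debug log starting from what you see elsewhere, two small conversions help: the decimal transaction ID becomes four hexadecimal digits, and the FQDN becomes the length-prefixed notation used in the trace. The helper names below are my own; the expected values in the comments come straight from the log shown above.

```python
def log_id(dns_id):
    """Format a decimal DNS transaction ID as the 4-digit hex shown in the log."""
    return format(dns_id, "04x")


def log_name(fqdn):
    """Render an FQDN in the (length)label notation of the Windows DNS debug log."""
    labels = fqdn.rstrip(".").split(".")
    return "".join(f"({len(label)}){label}" for label in labels) + "(0)"


print(log_id(20425))                      # 4fc9
print(log_name("test.avi.iberia.local"))  # (4)test(3)avi(6)iberia(5)local(0)
```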

GSLB Leader Configuration

Now that the DNS Zone Delegation is done, let’s move to the GSLB AVI Controller again to create the GSLB configuration. If we go to Infrastructure > GSLB, note how the GSLB status is set to Off.

Click on the pencil icon to turn the service on and populate the fields as in the example below. You need to specify a GSLB Subdomain that matches the intended DNS zone in which you will create the virtual services, in this case avi.iberia.local. Then click Save and Set DNS Virtual Services.

Now select the DNS Virtual Service we created before and pick the subdomains in which we are going to create the GSLB Services from AMKO.

Save the config and you will get this screen indicating that the GSLB service for the avi.iberia.local subdomain is up and running.

AMKO Installation

The installation of AMKO is quite similar to the AKO installation. It’s important to note that AMKO assumes it has connectivity to the k8s Master API servers of all the clusters across the deployment. That means all the configs and status across the different k8s clusters will be monitored from a single AMKO that will reside, in our case, in the Site1 AZ1 k8s cluster. As in the case of AKO, we will run the AMKO pod in a dedicated namespace. We will also use a namespace called avi-system for this purpose. Ensure the namespace exists before deploying AMKO; otherwise use kubectl to create it.

kubectl create namespace avi-system

As you may know if you are familiar with k8s, to get access to the API of the K8s cluster we need a kubeconfig file that contains connection information as well as the credentials needed to authenticate our sessions. The default configuration file is located at ~/.kube/config on the master node and is referred to as the kubeconfig file. In this case we will need a kubeconfig file containing multi-cluster access. There is a tutorial on how to create the kubeconfig file for multicluster access in the official AMKO github located at this site.

The contents of my kubeconfig file will look like this. You can easily identify different sections such as clusters, contexts and users.

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <ca-data.crt>
    server: https://10.10.24.160:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: <client-data.crt>
    client-key-data: <client-key.key>

Using the information above and combining the information extracted from the three individual kubeconfig files we can create a customized multi-cluster config file. Replace certificates and keys with your specific kubeconfig files information and also choose representative names for the contexts and users. A sample version of my multicluster kubeconfig file can be accessed here for your reference.

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <Site1-AZ1-ca.cert>
    server: https://10.10.24.160:6443
  name: Site1AZ1
- cluster:
    certificate-authority-data: <Site1-AZ2-ca.cert>
    server: https://10.10.23.170:6443
  name: Site1AZ2
- cluster:
    certificate-authority-data: <Site2-ca.cert>
    server: https://10.10.24.190:6443
  name: Site2
contexts:
- context:
    cluster: Site1AZ1
    user: s1az1-admin
  name: s1az1
- context:
    cluster: Site1AZ2
    user: s1az2-admin
  name: s1az2
- context:
    cluster: Site2
    user: s2-admin
  name: s2
kind: Config
preferences: {}
users:
- name: s1az1-admin
  user:
    client-certificate-data: <s1az1-client.cert>
    client-key-data: <s1az1-client.key>
- name: s1az2-admin
  user:
    client-certificate-data: <s1az2-client.cert>
    client-key-data: <s1az2-client.key>
- name: s2-admin
  user:
    client-certificate-data: <site2-client.cert>
    client-key-data: <site2-client.key>
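The manual merge described above is mechanical enough to be scripted. The following is a minimal sketch, assuming each per-cluster kubeconfig has already been loaded into a Python dictionary (for example with a YAML parser, which is not included here). The function names `merge_kubeconfigs` and `single`, the `<context>-admin` user naming and the placeholder credentials are all illustrative assumptions.

```python
def merge_kubeconfigs(sources):
    """Merge single-cluster kubeconfigs into one multi-cluster config.

    sources maps a context name to (cluster_name, kubeconfig_dict).
    """
    merged = {"apiVersion": "v1", "kind": "Config", "preferences": {},
              "clusters": [], "contexts": [], "users": []}
    for context, (cluster_name, cfg) in sources.items():
        user_name = f"{context}-admin"
        merged["clusters"].append(
            {"name": cluster_name, "cluster": cfg["clusters"][0]["cluster"]})
        merged["users"].append(
            {"name": user_name, "user": cfg["users"][0]["user"]})
        merged["contexts"].append(
            {"name": context,
             "context": {"cluster": cluster_name, "user": user_name}})
    return merged


def single(server, tag):
    """Stand-in for a parsed per-cluster kubeconfig with placeholder credentials."""
    return {
        "clusters": [{"name": "kubernetes", "cluster": {
            "server": server, "certificate-authority-data": f"<{tag}-ca.cert>"}}],
        "users": [{"name": "kubernetes-admin", "user": {
            "client-certificate-data": f"<{tag}-client.cert>",
            "client-key-data": f"<{tag}-client.key>"}}],
    }


merged = merge_kubeconfigs({
    "s1az1": ("Site1AZ1", single("https://10.10.24.160:6443", "s1az1")),
    "s1az2": ("Site1AZ2", single("https://10.10.23.170:6443", "s1az2")),
    "s2": ("Site2", single("https://10.10.24.190:6443", "s2")),
})
print([c["name"] for c in merged["contexts"]])
```

Generating the file this way keeps each user paired with its own certificate and key, which is the easiest thing to get wrong when copying and pasting by hand.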

Save the multiconfig file as gslb-members. To verify there are no syntax problems in our file, and provided there is connectivity to the API server of each cluster, we can try to read the created file using kubectl as shown below.

kubectl --kubeconfig gslb-members config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

s1az1 Site1AZ1 s1az1-admin

s1az2 Site1AZ2 s1az2-admin

s2 Site2 s2-admin

It is very common and also useful to manage the three clusters from a single operations server and switch among different contexts to operate each of the clusters centrally. To do so, just place this new multi-cluster kubeconfig file in the default path where kubectl looks for the kubeconfig file, which is $HOME/.kube/config. Once done, you can easily change between contexts using kubectl with the use-context keyword. In the example below we switch to context s2 and then note how kubectl get nodes lists the nodes of the cluster at Site2.

kubectl config use-context s2

Switched to context "s2".

kubectl get nodes

NAME STATUS ROLES AGE VERSION

site2-k8s-master01 Ready master 60d v1.18.10

site2-k8s-worker01 Ready <none> 60d v1.18.10

site2-k8s-worker02 Ready <none> 60d v1.18.10

Switch now to the target cluster in which AMKO is going to be installed. In our case the context for accessing that cluster is s1az1, which corresponds to the cluster located at Site1 in Availability Zone 1. Once switched, we will generate a k8s generic secret object named gslb-config-secret that will be used by AMKO to get access to the three clusters in order to watch for the required k8s LoadBalancer and Ingress service type objects.

kubectl config use-context s1az1

Switched to context "s1az1".

kubectl create secret generic gslb-config-secret --from-file gslb-members -n avi-system

secret/gslb-config-secret created

Now it’s time to install AMKO. First you have to add a new repo that points to the URL in which the AMKO helm chart is published.

helm repo add amko https://avinetworks.github.io/avi-helm-charts/charts/stable/amko

If we search in the repository we can see the latest version available, in this case 1.4.1.

helm search repo

NAME CHART VERSION APP VERSION DESCRIPTION

amko/amko 1.4.1 1.4.1 A helm chart for Avi Multicluster Kubernetes Operator

The AMKO base config is created using a yaml file that contains the required configuration items. To get a sample file with the default values, run the following command.

helm show values amko/amko --version 1.4.1 > values_amko.yaml

Now edit the values_amko.yaml file that will be the configuration base of our Multicluster operator. The following table shows some of the specific values for AMKO.

| Parameter | Value | Description |
| --- | --- | --- |
| configs.controllerVersion | 20.1.5 | Release version of the AVI Controller |
| configs.gslbLeaderController | 10.10.20.42 | IP address of the AVI Controller that will act as GSLB Leader |
| configs.memberClusters | clusterContext: “s1az1” “s1az2” “s2” | Specify the contexts used in the gslb-members file to reach the K8S API of the different k8s clusters |
| gslbLeaderCredentials.username | admin | Username to get access to the AVI Controller API |
| gslbLeaderCredentials.password | password01 | Password to get access to the AVI Controller API |
| globalDeploymentPolicy.appSelector.label | app: gslb | All the services that contain a label field matching app: gslb will be considered by AMKO. A namespace selector can also be used for this purpose |
| globalDeploymentPolicy.matchClusters | s1az1 s1az2 s2 | List of clusters, taken from configs.memberClusters, to which the GDP object will be applied |
| globalDeploymentPolicy.trafficSplit | traffic split ratio (see yaml file below for syntax) | Define how DNS answers are distributed across clusters |

The full values_amko.yaml I am using as part of this lab is shown below and can also be found here for your reference. Remember to use the same context names as specified in the gslb-members multicluster kubeconfig file we used to create the secret object, otherwise it will not work.

# Default values for amko.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.
replicaCount: 1
image:
  repository: avinetworks/amko
  pullPolicy: IfNotPresent
configs:
  gslbLeaderController: "10.10.20.42"
  controllerVersion: "20.1.2"
  memberClusters:
    - clusterContext: "s1az1"
    - clusterContext: "s1az2"
    - clusterContext: "s2"
  refreshInterval: 1800
  logLevel: "INFO"
gslbLeaderCredentials:
  username: admin
  password: Password01
globalDeploymentPolicy:
  # appSelector takes the form of:
  appSelector:
    label:
      app: gslb
  # namespaceSelector takes the form of:
  # namespaceSelector:
  #   label:
  #     ns: gslb
  # list of all clusters that the GDP object will be applied to, can take
  # any/all values
  # from .configs.memberClusters
  matchClusters:
    - "s1az1"
    - "s1az2"
    - "s2"
  # list of all clusters and their traffic weights, if unspecified,
  # default weights will be
  # given (optional). Uncomment below to add the required trafficSplit.
  trafficSplit:
    - cluster: "s1az1"
      weight: 6
    - cluster: "s1az2"
      weight: 4
    - cluster: "s2"
      weight: 2
serviceAccount:
  # Specifies whether a service account should be created
  create: true
  # Annotations to add to the service account
  annotations: {}
  # The name of the service account to use.
  # If not set and create is true, a name is generated using the fullname template
  name:
resources:
  limits:
    cpu: 250m
    memory: 300Mi
  requests:
    cpu: 100m
    memory: 200Mi
service:
  type: ClusterIP
  port: 80
persistentVolumeClaim: ""
mountPath: "/log"
logFile: "amko.log"

After customizing the values_amko.yaml file we can now install AMKO through helm.

helm install amko/amko --generate-name --version 1.4.1 -f values_amko.yaml --namespace avi-system

The installation creates an AMKO Pod and also a GSLBConfig and a GlobalDeploymentPolicy CRD object that contain the configuration. It is important to note that any change to the GlobalDeploymentPolicy object is handled at runtime and does not require a full restart of the AMKO pod. As an example, you can change on the fly how the traffic is split across different clusters just by editing the corresponding object.
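Since the cluster names in the policy must match the contexts declared as member clusters, a mistyped or duplicated name is easy to miss. The sketch below is a hypothetical pre-flight check, not something AMKO ships: it assumes the values file has been parsed into a dictionary with the same keys as the yaml shown earlier, and the function name `check_cluster_refs` is my own.

```python
def check_cluster_refs(values):
    """Return a list of problems in the cluster names used by the policy."""
    members = {m["clusterContext"] for m in values["configs"]["memberClusters"]}
    policy = values["globalDeploymentPolicy"]
    match = policy.get("matchClusters", [])
    split = [entry["cluster"] for entry in policy.get("trafficSplit", [])]
    problems = []
    for field, names in (("matchClusters", match), ("trafficSplit", split)):
        for name in names:
            if name not in members:
                problems.append(f"{field}: {name} is not a member cluster")
        for name in sorted(set(names)):
            if names.count(name) > 1:
                problems.append(f"{field}: {name} is listed more than once")
    return problems


# Relevant subset of the values used in this lab.
values = {
    "configs": {"memberClusters": [
        {"clusterContext": "s1az1"},
        {"clusterContext": "s1az2"},
        {"clusterContext": "s2"},
    ]},
    "globalDeploymentPolicy": {
        "matchClusters": ["s1az1", "s1az2", "s2"],
        "trafficSplit": [
            {"cluster": "s1az1", "weight": 6},
            {"cluster": "s1az2", "weight": 4},
            {"cluster": "s2", "weight": 2},
        ],
    },
}

print(check_cluster_refs(values))  # an empty list means the names are consistent
```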

Let’s use Octant to explore the new objects created by the AMKO installation. First, we need to change the namespace since all the related objects have been created within the avi-system namespace. At the top of the screen switch to avi-system.

If we go to Workloads, we can easily identify the pods in the avi-system namespace. In this case, apart from ako-0, which is also running in this cluster, amko-0 appears as you can see in the screen below.

Browse to Custom Resources and you can identify the two Custom Resource Definitions that the AMKO installation has created. The first one is globaldeploymentpolicies.amko.vmware.com and there is an object called global-gdp. This is the object used at runtime to change the policies that dictate how AMKO behaves, such as the labels we are using to select the interesting k8s services and the load balancing split ratio among the different clusters. At the moment of writing, the only available algorithm to split traffic across clusters using AMKO is weighted round robin, but other methods such as GeoRedundancy are currently roadmapped and will be available soon.
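To get a feel for what the configured weights mean, the sketch below computes the share of DNS answers each cluster would receive with the 6/4/2 split used in this lab, and shows a deliberately naive weighted round robin. It is an illustration of the general technique only and does not claim to match the scheduling AVI applies internally.

```python
from fractions import Fraction
from itertools import cycle, islice

# Weights from the trafficSplit section of the values file.
traffic_split = {"s1az1": 6, "s1az2": 4, "s2": 2}


def shares(weights):
    """Fraction of the answers each cluster gets under weighted round robin."""
    total = sum(weights.values())
    return {cluster: Fraction(weight, total) for cluster, weight in weights.items()}


def naive_wrr(weights):
    """Yield cluster names forever, each appearing `weight` times per round."""
    one_round = [cluster for cluster, weight in weights.items() for _ in range(weight)]
    return cycle(one_round)


for cluster, share in shares(traffic_split).items():
    print(f"{cluster}: {share} ({float(share):.1%})")

first_round = list(islice(naive_wrr(traffic_split), 12))
print(first_round.count("s1az1"), first_round.count("s1az2"), first_round.count("s2"))
```

With these weights half of the answers point to Site1 AZ1, a third to Site1 AZ2 and a sixth to Site2.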

In the second CRD called gslbconfigs.amko.vmware.com we can find an object named gc-1 that displays the base configuration of the AMKO service. The only parameter we can change at runtime without restarting AMKO is the log level.

Alternatively, if you prefer the command line, you can always edit the CRD object through regular kubectl edit commands as shown below.

kubectl edit globaldeploymentpolicies.amko.vmware.com global-gdp -n avi-system

Creating Multicluster K8s Ingress Service

Before adding extra complexity to the GSLB architecture let’s try to create our first multicluster Ingress Service. For this purpose I will use another kubernetes application called hello-kubernetes whose declarative yaml file is posted here. The application presents a simple web interface and it will use the MESSAGE environment variable in the yaml file definition to specify a message that will appear in the http response. This will be very helpful to identify which server is actually serving the content at any given time.

The full yaml file shown below defines the Deployment, the Service and the Ingress.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello
  template:
    metadata:
      labels:
        app: hello
    spec:
      containers:
      - name: hello-kubernetes
        image: paulbouwer/hello-kubernetes:1.7
        ports:
        - containerPort: 8080
        env:
        - name: MESSAGE
          value: "MESSAGE: This service resides in Site1 AZ1"
---
apiVersion: v1
kind: Service
metadata:
  name: hello
spec:
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: hello
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: hello
  labels:
    app: gslb
spec:
  rules:
  - host: hello.avi.iberia.local
    http:
      paths:
      - path: /
        backend:
          serviceName: hello
          servicePort: 80

Note we are passing a MESSAGE variable to the container that will be used to display the text “MESSAGE: This service resides in Site1 AZ1”. Note also that in the metadata configuration of the Ingress section we have defined a label with the value app: gslb. This setting will be used by AMKO to select that Ingress service and create the corresponding GSLB configuration at the AVI Controller.
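The selection logic can be pictured as a plain label comparison: an object is picked up only if it carries every key and value of the configured selector. The sketch below is my own simplified model of that idea (the function name `matches` is hypothetical) and is not taken from the AMKO source code.

```python
def matches(app_selector, object_labels):
    """True when the object carries every label required by the selector."""
    return all(object_labels.get(key) == value for key, value in app_selector.items())


app_selector = {"app": "gslb"}       # selector configured for AMKO in this lab

ingress_labels = {"app": "gslb"}     # labels on the hello Ingress
pod_labels = {"app": "hello"}        # labels on the hello Deployment pods

print(matches(app_selector, ingress_labels))
print(matches(app_selector, pod_labels))
```

This is why the label goes on the Ingress metadata and not on the Deployment: the pods keep app: hello for the Service selector, while the Ingress advertises app: gslb.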

Let’s apply the hello.yaml file with the contents shown above using kubectl

kubectl apply -f hello.yaml

service/hello created

deployment.apps/hello created

ingress.networking.k8s.io/hello created

We can inspect the events at the AMKO pod to understand the dialogue with the AVI Controller API.

kubectl logs -f amko-0 -n avi-system

# A new ingress object has been detected with the appSelector set

2020-12-18T19:00:55.907Z INFO k8sobjects/ingress_object.go:295 objType: ingress, cluster: s1az1, namespace: default, name: hello/hello.avi.iberia.local, msg: accepted because of appSelector

2020-12-18T19:00:55.907Z INFO ingestion/event_handlers.go:383 cluster: s1az1, ns: default, objType: INGRESS, op: ADD, objName: hello/hello.avi.iberia.local, msg: added ADD/INGRESS/s1az1/default/hello/hello.avi.iberia.local key

# A Health-Monitor object to monitor the health state of the VS behind this GSLB service

2020-12-18T19:00:55.917Z INFO rest/dq_nodes.go:861 key: admin/hello.avi.iberia.local, hmKey: {admin amko--http--hello.avi.iberia.local--/}, msg: HM cache object not found

2020-12-18T19:00:55.917Z INFO rest/dq_nodes.go:648 key: admin/hello.avi.iberia.local, gsName: hello.avi.iberia.local, msg: creating rest operation for health monitor

2020-12-18T19:00:55.917Z INFO rest/dq_nodes.go:425 key: admin/hello.avi.iberia.local, queue: 0, msg: processing in rest queue

2020-12-18T19:00:56.053Z INFO rest/dq_nodes.go:446 key: admin/hello.avi.iberia.local, msg: rest call executed successfully, will update cache

2020-12-18T19:00:56.053Z INFO rest/dq_nodes.go:1085 key: admin/hello.avi.iberia.local, cacheKey: {admin amko--http--hello.avi.iberia.local--/}, value: {"Tenant":"admin","Name":"amko--http--hello.avi.iberia.local--/","Port":80,"UUID":"healthmonitor-5f4cc076-90b8-4c2e-934b-70569b2beef6","Type":"HEALTH_MONITOR_HTTP","CloudConfigCksum":1486969411}, msg: added HM to the cache

# A new GSLB object named hello.avi.iberia.local is created. The associated IP address for resolution is 10.10.25.46. The weight for the Weighted Round Robin traffic distribution is also set. A Health-Monitor named amko--http--hello.avi.iberia.local is also attached for health-monitoring

2020-12-18T19:00:56.223Z INFO rest/dq_nodes.go:1161 key: admin/hello.avi.iberia.local, cacheKey: {admin hello.avi.iberia.local}, value: {"Name":"hello.avi.iberia.local","Tenant":"admin","Uuid":"gslbservice-7dce3706-241d-4f87-86a6-7328caf648aa","Members":[{"IPAddr":"10.10.25.46","Weight":6}],"K8sObjects":["INGRESS/s1az1/default/hello/hello.avi.iberia.local"],"HealthMonitorNames":["amko--http--hello.avi.iberia.local--/"],"CloudConfigCksum":3431034976}, msg: added GS to the cache

Now go to the AVI Controller acting as GSLB Leader and open Applications > GSLB Services.

Click the pencil icon to explore the configuration AMKO has created in response to the new Ingress. A GSLB service named hello.avi.iberia.local with a Health-Monitor has been created as shown below:

Scrolling down you will find a new GSLB pool has been defined as well.

Click the pencil icon to see its other properties.

Finally, you get the IPv4 entry that the GSLB service will use to answer external queries. This IP address was obtained from the Ingress service external IP Address property at the source site that, by the way, was allocated by the integrated IPAM in the AVI Controller in that site.

If you go to the Dashboard, from Applications > Dashboard > View GSLB Services, you can see a representation of the GSLB object hello.avi.iberia.local. It has a GSLB pool called hello.avi.iberia.local-10, which in turn has a Pool Member entry with the IP address 10.10.25.46, the IP allocated to our hello-kubernetes service.

If you open a browser and go to http://hello.avi.iberia.local you get the content of the hello-kubernetes application. Note how the MESSAGE environment variable we passed appears as part of the content the web server sends back. In this case the message indicates that the service we are accessing resides in SITE1 AZ1.

Now it’s time to create the corresponding services in the remaining clusters to convert the single-site application into a multi-AZ, multi-region application. Just change context using kubectl and apply the YAML file again, changing the MESSAGE variable to “MESSAGE: This service resides in SITE1 AZ2” for the hello-kubernetes app at Site1 AZ2.

kubectl config use-context s1az2

Switched to context "s1az2".

kubectl apply -f hello_s1az2.yaml

service/hello created

deployment.apps/hello created

ingress.networking.k8s.io/hello created

Similarly, do the same for Site2, now using “MESSAGE: This service resides in SITE2” for the same application. The hello.yaml files for each cluster can be found here.

kubectl config use-context s2

Switched to context "s2".

kubectl apply -f hello_s2.yaml

service/hello created

deployment.apps/hello created

ingress.networking.k8s.io/hello created

When done, go to the GSLB Service and verify there are new entries in the GSLB Pool. It can take some time for the new members to be declared up and shown in green while the health-monitor checks the availability of the application just created.

After some seconds the three new systems should show a comforting green color as an indication of the current state.

If you explore the configuration of the newly created service you can see the IP address assigned to each new Pool member as well as the Ratio, which has been configured according to the AMKO trafficSplit parameter. For Site1 AZ2 the assigned IP address is 10.10.26.40 and the ratio has been set to 4 as declared in the AMKO policy.

In the same way, for the Site 2 the assigned IP address is 10.10.23.40 and the ratio has been set to 2 as dictated by AMKO.

If you go to Dashboard and display the GSLB Service, you can get a global view of the GSLB Pool and its members

Testing GSLB Service

Now it’s time to test the GSLB Service. If you open a browser and refresh periodically you can see how the MESSAGE changes, indicating we are reaching the content at different sites thanks to the load balancing algorithm implemented in the GSLB service. For example, at some point you would see this message, which means we are reaching the HTTP service at Site1 AZ2.

And also this message indicating the service is being served from SITE2.

A smarter way to verify the behavior is to create a simple script that does the “refresh” task for us programmatically, so we can analyze how the system answers our external DNS requests. Before starting we need to lower the TTL for our service to accelerate the local DNS cache expiration. This is useful for testing purposes but is not a good practice for a production environment. In this case we will configure the GSLB service hello.avi.iberia.local to serve the DNS answers with a TTL equal to 2 seconds.

Let’s create a single-line infinite loop using shell scripting to send a curl request every two seconds to the intended URL at http://hello.avi.iberia.local. We will grep the MESSAGE string to display the line of the HTTP response that tells us which of the three sites is actually serving the content. Remember we are using a Weighted Round Robin algorithm to balance the load, which is why the different messages do not appear with the same frequency, as you can see below.

while true; do curl -m 2 http://hello.avi.iberia.local -s | grep MESSAGE; sleep 2; done

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE2

MESSAGE: This Service resides in SITE2

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE2

MESSAGE: This service resides in SITE2

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ1

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE1 AZ2

MESSAGE: This service resides in SITE2
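Counting the lines by eye gets tedious, so the captured output can be tallied per site instead. The following is only a sketch: `tally_sites` is a hypothetical helper name, and it assumes every response line has the form "MESSAGE: This service resides in <site>" as in the output above.

```shell
# Count how many responses each site served.
# stdin:  lines such as "MESSAGE: This service resides in SITE1 AZ1"
# stdout: "<count> <site>" per site, most frequent first
tally_sites() {
  grep -i 'MESSAGE:' |
    sed -e 's/^.*resides in[[:space:]]*//' -e 's/[[:space:]]*$//' |
    sort | uniq -c | sort -k1,1nr -k2 |
    sed -e 's/^[[:space:]]*//'
}

# A here-document stands in for the live curl loop.
tally_sites <<'EOF'
MESSAGE: This service resides in SITE1 AZ1
MESSAGE: This service resides in SITE1 AZ1
MESSAGE: This service resides in SITE1 AZ1
MESSAGE: This service resides in SITE1 AZ2
MESSAGE: This service resides in SITE1 AZ2
MESSAGE: This service resides in SITE2
EOF
```

To use it against the live service, replace the infinite loop with a bounded one and pipe its output into `tally_sites`, then compare the counts with the weights configured in AMKO.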

If we go to the AVI Log of the related DNS Virtual Service we can see how the sequence of responses follows the Weighted Round Robin algorithm as well.

Additionally, I have created a script here that runs the infinite loop for you and shows well-formatted, coloured information about the DNS resolution and the HTTP response, which can be very helpful for testing and demos. It works with the hello-kubernetes application but you can easily modify it to fit your needs. The script needs the URL and the interval of the loop as input parameters.

./check_dns.sh hello.avi.iberia.local 2

A sample output is shown below for your reference.

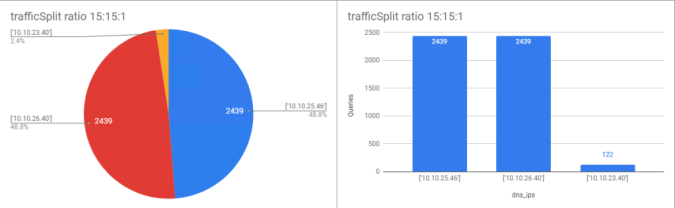

Exporting AVI Logs for data visualization and trafficSplit analysis

As you have already noticed during this series of articles, NSX Advanced Load Balancer stands out for its rich embedded Analytics engine that helps you visualize all the activity in your application. Still, there are times when you prefer to export the data for further analysis using a Business Intelligence tool of your choice. As an example I will show you a very simple way to verify the traffic split distribution across the three datacenters by exporting the raw logs. That way we will check whether traffic really fits the ratio we have configured for load balancing (6:3:1 in this case). I will export the logs and analyze them.

Remember the trafficSplit setting can be changed at runtime just by editing the YAML file associated with the global-gdp object AMKO created. Using Octant we can easily browse to the avi-system namespace and then go to Custom Resource > globaldeploymentpolicies.amko.vmware.com, click on the global-gdp object and click on the YAML tab. From here modify the assigned weight for each cluster as per your preference, click UPDATE and you are done.

Whenever you change this setting AMKO refreshes the configuration of the whole GSLB object to reflect the new changes. This resets the TTL value to its default of 30 seconds. If you want to repeat the test to verify the new trafficSplit distribution, make sure you change the TTL to a lower value such as 1 second to speed up the expiration of the DNS cache.

A good tool to send DNS traffic is dnsperf, which is available here. This DNS performance tool reads input files describing DNS queries and sends those queries to DNS servers to measure performance. In our case we have just one GSLB Service so far, so queryfile.txt contains a single line with the FQDN under test and the type of query. In this case we will send type A queries. The contents of the file are shown below.

cat queryfile.txt

hello.avi.iberia.local. A

We will start by sending 10000 queries to our DNS. In this case we will send the queries directly to the IP address of the DNS virtual service under test (-s option) to make sure we are measuring the performance of the AVI DNS and not the parent domain DNS that is delegating the DNS zone. The -d option points to the query file, and the -n option instructs dnsperf to iterate over that file the desired number of times. When the test finishes it displays the observed performance metrics.

dnsperf -d queryfile.txt -s 10.10.24.186 -n 10000

DNS Performance Testing Tool

Version 2.3.4

[Status] Command line: dnsperf -d queryfile.txt -s 10.10.24.186 -n 10000

[Status] Sending queries (to 10.10.24.186)

[Status] Started at: Wed Dec 23 19:40:32 2020

[Status] Stopping after 10000 runs through file

[Timeout] Query timed out: msg id 0

[Timeout] Query timed out: msg id 1

[Timeout] Query timed out: msg id 2

[Timeout] Query timed out: msg id 3

[Timeout] Query timed out: msg id 4

[Timeout] Query timed out: msg id 5

[Timeout] Query timed out: msg id 6

[Status] Testing complete (end of file)

Statistics:

Queries sent: 10000

Queries completed: 9994 (99.94%)

Queries lost: 12 (0.06%)

Response codes: NOERROR 9994 (100.00%)

Average packet size: request 40, response 56

Run time (s): 0.344853

Queries per second: 28963.065422

Average Latency (s): 0.001997 (min 0.000441, max 0.006998)

Latency StdDev (s): 0.001117

From the data above you can see that the performance figures are pretty good. With a single vCPU the Service Engine has responded to 10,000 queries at a rate of almost 30,000 queries per second. When the dnsperf test is completed, go to the Logs section of the DNS VS to check how the logs show up in the GUI.

As you can see, only a very small fraction of the expected logs are shown in the console. The reason is that the collection of client logs is throttled at the Service Engines. Throttling is just a rate-limiting mechanism to save resources and is implemented as a number of logs collected per second. Any excess logs in a second are dropped.

Throttling is controlled by two sets of properties: (i) throttles specified in the analytics policy of a virtual service, and (ii) throttles specified in the Service Engine group of a Service Engine. Each set has a throttle property for each type of client log. A client log of a specific type could be dropped because of throttles for that type in either of the above two sets.
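To make the effect of a per-second throttle concrete, here is a small simulation. It is only an illustration of the rate-limiting idea described above, not the Service Engine implementation: the input format (one epoch second per log event), the helper name `throttle_logs` and the limit of 10 logs per second are all assumptions chosen for the example.

```shell
# Simulate a per-second log throttle.
# stdin: one integer epoch second per log event (hypothetical input format)
# $1:    maximum logs kept per second; 0 means no throttling
throttle_logs() {
  awk -v limit="$1" '
    { seen[$1]++                                   # events observed in this second
      if (limit == 0 || seen[$1] <= limit) kept++  # within budget: log is collected
      else dropped++ }                             # excess in this second: dropped
    END { printf "kept=%d dropped=%d\n", kept, dropped }
  '
}

# 25 events in second 100 and 5 events in second 101, throttle of 10 logs/s
{ i=0; while [ "$i" -lt 25 ]; do echo 100; i=$((i + 1)); done
  i=0; while [ "$i" -lt 5 ];  do echo 101; i=$((i + 1)); done
} | throttle_logs 10
```

With these inputs the burst in the first second loses 15 of its 25 events while the quieter second keeps everything, which is the same pattern we saw when dnsperf sent thousands of queries in well under a second.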

You can modify the Log Throttling at the virtual service level by editing Virtual Service: g-dns and clicking on the Analytics tab. In the Client Log Settings disable log throttling for the Non-significant Logs by setting the value to zero as shown below:

In the same way you can also modify the Log Throttling settings at the Service Engine Group level. Edit the Service Engine Group associated with the DNS Service. Click on the Advanced tab and go to the Log Collection and Streaming Settings. Set the Non-significant Log Throttle to 0 Logs/Second, which means no throttle is applied.

Be careful when applying these settings in a production environment! Repeat the test and explore the logs again. Hover the mouse over the bar that shows traffic and notice how the system is now getting all the logs into the AVI Analytics console.

Let’s do some data analysis to verify whether the configured trafficSplit ratio is actually working as expected. We will use dnsperf again, but now we will rate-limit the queries using the -Q option. We will send 5000 queries overall at 100 queries per second.

dnsperf -d queryfile.txt -s 10.10.24.186 -n 5000 -Q 100

This time we can use the log export capabilities of the AVI Controller. Select the period of logs you want to export and click on the Export button to get all 5000 logs.

Now you have a CSV file containing all the analytics data within scope. There are many options to process the file. I am showing here a rudimentary way using the Google Sheets application. If you have a Gmail account just point your browser to https://docs.google.com/spreadsheets. Now create a blank spreadsheet and go to File > Import as shown below.

Click the Upload tab and browse for the CSV file you have just downloaded. Once the upload has completed, import the data contained in the file using the default options as shown below.

Google Sheets automatically organizes our comma-separated values into columns, producing a fairly big spreadsheet with all the data as shown below.

Locate the column dns_ips, which contains the responses the DNS Virtual Service sends when queried for the dns_fqdn hello.avi.iberia.local. Select the full column by clicking on its column header, in this case the one marked as BJ, as shown below:

Now let Google Sheets do the trick for us. Google Sheets has some automatic exploration capabilities that suggest visualization graphs for the selected data. Just locate the Explore button at the bottom right of the spreadsheet.

When completed, Google Sheets offers the following visualization graphs.

For the purpose of describing how the traffic is split across datacenters, the Pie Chart or the Frequency Histogram can be very useful. Add the suggested graphs to the spreadsheet and, after a little customization to show values and change the title, you get these nice graphs. The observed distribution fits the expected 6:3:1 ratio perfectly.
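If you prefer to stay on the command line, the same per-IP count can be approximated with awk. Treat this as a rough sketch under stated assumptions: `column_share` is a hypothetical helper, the export is assumed to be a simple CSV with no commas embedded inside quoted fields, and each dns_ips cell is assumed to hold a single IP address. A real export may need a proper CSV parser.

```shell
# Tally the values of one CSV column, selected by header name.
# $1:    column name (for example dns_ips)
# stdin: CSV with a header row (simple CSV only, see assumptions above)
column_share() {
  awk -F',' -v col="$1" '
    { sub(/\r$/, "") }                        # tolerate Windows line endings
    NR == 1 {
      for (i = 1; i <= NF; i++) { h = $i; gsub(/"/, "", h); if (h == col) idx = i }
      if (!idx) { print "column not found: " col > "/dev/stderr"; exit 1 }
      next
    }
    { v = $idx; gsub(/"/, "", v); if (v != "") { n[v]++; total++ } }
    END { for (v in n) printf "%s %d %.1f%%\n", v, n[v], 100 * n[v] / total }
  ' | sort -k2,2nr
}

# Tiny made-up sample using the column names mentioned above.
column_share dns_ips <<'EOF'
dns_fqdn,dns_ips
hello.avi.iberia.local,10.10.25.46
hello.avi.iberia.local,10.10.25.46
hello.avi.iberia.local,10.10.26.40
hello.avi.iberia.local,10.10.23.40
EOF
```

Each output line shows an IP address, the number of answers that returned it and its share of the total, which can be compared directly with the configured weights.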

A use case for the trafficSplit feature might be the implementation of a Canary Deployment strategy. With a canary deployment, you deploy new application code in a small part of the production infrastructure. Once the application is signed off for release, only a few users are routed to it, which minimizes the impact of any errors. I will change the ratio to simulate a Canary Deployment by directing just a small portion of traffic to Site2, which would be the site where the new code is deployed. We will change the weights to a distribution of 20:20:1 as shown below. With this setting the theoretical share of traffic sent to the Canary Deployment would be 1/(20+20+1) = 2.4%. Let’s see how it goes.

trafficSplit:
  - cluster: s1az1
    weight: 20
  - cluster: s1az2
    weight: 20
  - cluster: s2
    weight: 1
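The theoretical share for any set of weights is simply each weight divided by the sum of all weights. A minimal sketch of that arithmetic follows; `expected_split` and its name=weight argument format are made up for this example and are not part of AMKO.

```shell
# Print the theoretical share of traffic for each cluster.
# Arguments: name=weight pairs, for example s1az1=20 s1az2=20 s2=1
expected_split() {
  for pair in "$@"; do echo "$pair"; done |
    awk -F'=' '
      { name[NR] = $1; weight[NR] = $2; total += $2 }
      END { for (i = 1; i <= NR; i++)
              printf "%s %.1f%%\n", name[i], 100 * weight[i] / total }
    '
}

expected_split s1az1=20 s1az2=20 s2=1
```

For the 20:20:1 policy this prints 48.8% for each of the two Site1 clusters and 2.4% for s2, the canary figure used as the reference for the test below.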

Remember that every time we change this setting in AMKO the full GSLB service is refreshed, including the TTL setting. Set the TTL to 1 for the GSLB service again to speed up the expiration of the DNS cache and repeat the test.

dnsperf -d queryfile.txt -s 10.10.24.186 -n 5000 -Q 100

If you export and process the logs in the same way you will get the following results.

Invalid DNS Query Processing and curl

I have created this section because, although it may seem irrelevant, this setting can cause unexpected behavior depending on the application we use to establish the HTTP sessions. The AVI DNS Virtual Service has two different settings to respond to Invalid DNS Queries. You can see the options by going to the System-DNS profile attached to our Virtual Service.

The most typical setting for the Invalid DNS Query Processing is to configure the server to “Respond to unhandled DNS requests” to actively send an NXDOMAIN answer for those queries that cannot be resolved by the AVI DNS.

Let’s give the second method a try, which is “Drop unhandled DNS requests”. After configuring and saving it, if you use curl to open an HTTP connection to the target site, in this case hello.avi.iberia.local, you will notice it takes some time to receive the answer from our server.

curl hello.avi.iberia.local

< after a few seconds... >

<!DOCTYPE html>

<html>

<head>

<title>Hello Kubernetes!</title>

<link rel="stylesheet" type="text/css" href="/css/main.css">

<link rel="stylesheet" href="https://fonts.googleapis.com/css?family=Ubuntu:300" >

</head>

<body>

<div class="main">

<img src="/images/kubernetes.png"/>

<div class="content">

<div id="message">

MESSAGE: This service resides in SITE1 AZ1

</div>

<div id="info">

<table>

<tr>

<th>pod:</th>

<td>hello-6b9797894-m5hj2</td>

</tr>

<tr>

<th>node:</th>

<td>Linux (4.15.0-128-generic)</td>

</tr>

</table>

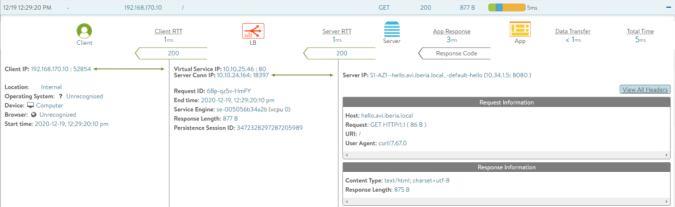

</body>

If we look into the AVI log for the request we can see that the request has been served very quickly, within a few milliseconds, so it seems there is nothing wrong with the Virtual Service itself.

But if we look a little deeper by capturing traffic at the client side we can see what has happened.

As you can see in the traffic capture above, the following packets have been sent as part of the attempt to establish a connection using curl to the intended URL at hello.avi.iberia.local:

- The curl client sends a type A DNS request asking for the FQDN hello.avi.iberia.local

- The curl client sends a second DNS request of type AAAA (asking for an IPv6 resolution) for the same FQDN hello.avi.iberia.local

- The DNS answers a few milliseconds later with the type A resolution, IP address 10.10.25.46

- Five seconds later, since curl has not received an answer for the AAAA query, curl retries by sending both the type A and type AAAA queries one more time.

- The DNS answers again very quickly with the type A resolution, IP address 10.10.25.46

- Finally the DNS sends a Server Failure indicating there is no response for the AAAA query for hello.avi.iberia.local

- Only after this does the curl client start the HTTP connection to the URL

As you can see, the fact that the AVI DNS server is dropping the traffic is causing the curl implementation to wait up to 9 seconds until the timeout is reached. We can avoid this behaviour by changing the setting in the AVI DNS Virtual Service.
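A quick way to confirm from the client that the delay sits in name resolution rather than in the HTTP exchange is to ask curl for its lookup time. The sketch below uses curl's standard time_namelookup write-out variable; `lookup_time` is a hypothetical wrapper, and the expectation that the -4 flag (resolve to IPv4 only) avoids the AAAA wait is an assumption about the client resolver, not something verified in this lab.

```shell
# Print curl's DNS lookup time in seconds for a URL.
# $1:   URL to test
# rest: extra curl flags passed through unchanged (for example -4)
lookup_time() {
  url=$1; shift
  curl -s -o /dev/null -m 15 -w '%{time_namelookup}\n' "$@" "$url"
}

# Example (needs access to the lab DNS):
#   lookup_time http://hello.avi.iberia.local
#   lookup_time http://hello.avi.iberia.local -4
```

If the first call reports several seconds and the second only milliseconds, the unanswered AAAA query is the likely culprit.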

Configure again the AVI DNS VS to “Respond to unhandled DNS requests” as shown below.

Now we can check how the behaviour has now changed.

As you can see above, curl receives an immediate answer from the DNS indicating that there is no AAAA record for this domain, so curl can proceed with the connection.

For the AAAA query, the AVI DNS now actively responds with an empty answer as shown below.

You can also check the behaviour using dig and querying for the AAAA record for this particular FQDN and you will get a NOERROR answer as shown below.

dig AAAA hello.avi.iberia.local

; <<>> DiG 9.16.1-Ubuntu <<>> AAAA hello.avi.iberia.local

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 60975

;; flags: qr aa; QUERY: 1, ANSWER: 0, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4000

;; QUESTION SECTION:

;hello.avi.iberia.local. IN AAAA

;; Query time: 4 msec

;; SERVER: 10.10.0.10#53(10.10.0.10)

;; WHEN: Mon Dec 21 09:03:05 CET 2020

;; MSG SIZE rcvd: 51
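A NOERROR status with zero answers, as in the output above, is commonly called a NODATA response: the name exists but has no record of the requested type. When checking many names it can help to classify dig output automatically. The helper below is a sketch that relies only on the header and flags lines shown above; `classify_dig` and its labels are names chosen for this example.

```shell
# Classify a DNS response from dig output read on stdin.
#   NODATA      -> status NOERROR with zero answers
#   ANSWERED    -> status NOERROR with at least one answer
#   NO_RESPONSE -> no header found (for example the query was dropped and timed out)
#   otherwise the raw status (NXDOMAIN, SERVFAIL, ...)
classify_dig() {
  awk '
    /->>HEADER<<-/ {
      if (match($0, /status: [A-Z]+/)) status = substr($0, RSTART + 8, RLENGTH - 8)
    }
    /ANSWER: [0-9]+/ {
      if (match($0, /ANSWER: [0-9]+/)) answers = substr($0, RSTART + 8, RLENGTH - 8) + 0
    }
    END {
      if (status == "") print "NO_RESPONSE"
      else if (status != "NOERROR") print status
      else if (answers == 0) print "NODATA"
      else print "ANSWERED"
    }
  '
}

# The two relevant lines from the dig output above.
classify_dig <<'EOF'
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 60975
;; flags: qr aa; QUERY: 1, ANSWER: 0, AUTHORITY: 0, ADDITIONAL: 1
EOF
```

In practice you would pipe dig straight into it, for example `dig AAAA hello.avi.iberia.local | classify_dig`.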

Summary

We now have a fair understanding of how AMKO actually works and of some techniques for testing and troubleshooting. Now is the time to explore the AVI GSLB capabilities for creating a more complex GSLB hierarchy and distributing the DNS tasks among different AVI controllers. The AMKO code is rapidly evolving and new features have been incorporated to add extra control over the GSLB configuration, such as changing the DNS algorithm. Stay tuned for further articles that will cover these newly available functions.