Introducing the Ingress Object

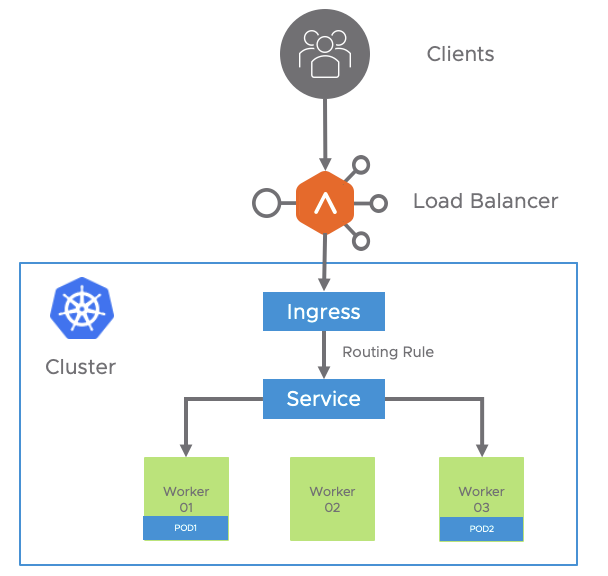

In this section we will focus on a specific K8s resource called Ingress. The Ingress is just another K8s object that manages external access to the services in a cluster, typically over HTTP(S). The Ingress resource exposes HTTP and HTTPS routes from outside the cluster and points them to services within the cluster. Traffic routing is controlled by rules that are defined as part of the Ingress specification.

An Ingress may be configured to provide K8s-deployed applications with externally reachable URLs, load balance traffic, terminate SSL/TLS, and offer name-based virtual hosting. The ingress controller (AKO in our case) is responsible for fulfilling the Ingress, using the external AVI Service Engines to handle the traffic. An Ingress does not expose arbitrary ports or protocols and is always related to HTTP/HTTPS traffic. Exposing services other than HTTP/HTTPS to the internet, such as a database or a Syslog service, typically uses a service of type NodePort or LoadBalancer.

To create the ingress we will use a declarative YAML file this time instead of imperative kubectl commands, since this is the usual way in a production environment and it gives us the chance to understand and modify the service definition just by changing the plain-text YAML. In this case I am using Kubernetes 1.18, and this is what a typical ingress definition looks like:

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: myservice
    spec:
      rules:
      - host: myservice.example.com
        http:
          paths:
          - path: /
            backend:
              serviceName: myservice
              servicePort: 80

As with any other Kubernetes declarative file, we need apiVersion, kind and metadata to define the resource. The ingress spec contains all the rules needed to configure our AVI Load Balancer: in this case the protocol (http), the name of the host (which must be a resolvable DNS name) and the routing information, such as the path and the backend that is actually terminating the traffic.
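The manifest above uses the `networking.k8s.io/v1beta1` schema, which fits Kubernetes 1.18. On clusters that serve `networking.k8s.io/v1` (stable since 1.19, and the only Ingress API from 1.22 onwards) the backend fields are nested differently and every path needs a `pathType`. The following Python sketch illustrates that field mapping on a plain dictionary. The function name `to_v1` and the default `pathType` of `Prefix` are my own illustrative choices, and only rule paths are handled, so treat it as a reading aid rather than a migration tool.

```python
import copy


def to_v1(ingress, path_type="Prefix"):
    """Return a copy of a v1beta1 Ingress dict laid out as networking.k8s.io/v1."""
    out = copy.deepcopy(ingress)  # leave the caller's manifest untouched
    out["apiVersion"] = "networking.k8s.io/v1"
    for rule in out.get("spec", {}).get("rules", []):
        for path in rule.get("http", {}).get("paths", []):
            backend = path["backend"]
            name = backend.pop("serviceName")
            port = backend.pop("servicePort")
            # v1 distinguishes numeric ports from named ports.
            port_ref = {"number": port} if isinstance(port, int) else {"name": port}
            path["backend"] = {"service": {"name": name, "port": port_ref}}
            # pathType is mandatory in v1; keep an existing value if present.
            path.setdefault("pathType", path_type)
    return out


# Same content as the YAML shown in this section.
legacy = {
    "apiVersion": "networking.k8s.io/v1beta1",
    "kind": "Ingress",
    "metadata": {"name": "myservice"},
    "spec": {
        "rules": [
            {
                "host": "myservice.example.com",
                "http": {
                    "paths": [
                        {
                            "path": "/",
                            "backend": {"serviceName": "myservice", "servicePort": 80},
                        }
                    ]
                },
            }
        ]
    },
}

converted = to_v1(legacy)
print(converted["spec"]["rules"][0]["http"]["paths"][0])
```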

AKO needs a service of type ClusterIP (the default service type) acting as a backend to send the ingress requests to. In a similar way, the Deployment and Service K8s resources can also be defined declaratively in a corresponding YAML file. Let’s define a deployment of an application called hackazon. Hackazon is an intentionally vulnerable machine that pretends to be an online store and incorporates some technologies that are currently in use: an AJAX interface, a realistic e-commerce workflow and even a RESTful API for a mobile application. The deployment and service definitions look like this:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hackazon
      labels:
        app: hackazon
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: hackazon
      template:
        metadata:
          labels:
            app: hackazon
        spec:
          containers:
          - image: mutzel/all-in-one-hackazon:postinstall
            name: hackazon
            ports:
            - containerPort: 80
              name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: hackazon
    spec:
      selector:
        app: hackazon
      ports:
      - port: 80
        targetPort: 80

As you can see above, in a single file we are describing the Deployment with several configuration elements such as the number of replicas, the container image we are deploying, the port, etc. At the bottom of the file you can also see the Service definition, which creates an abstraction called ClusterIP that represents the set of pods under the hackazon deployment.
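The Service finds "the set of pods under the hackazon deployment" through its selector: a pod becomes an endpoint when it carries every label listed there. A minimal Python sketch of that matching rule follows. The helper `selector_matches` and the second pod in the sample list are hypothetical and only there for illustration; the first pod name is taken from this article.

```python
def selector_matches(selector, pod_labels):
    """True when every key/value pair of the selector is present on the pod."""
    if not selector:
        # A Service without a selector does not pick up pods automatically.
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


service_selector = {"app": "hackazon"}  # spec.selector of the Service above

pods = [
    {
        "name": "hackazon-b94df7bdc-4d7bd",
        # The Deployment adds pod-template-hash on top of the template labels.
        "labels": {"app": "hackazon", "pod-template-hash": "b94df7bdc"},
    },
    # Hypothetical pod from another application, used as a negative case.
    {"name": "unrelated-pod", "labels": {"app": "something-else"}},
]

endpoints = [p["name"] for p in pods if selector_matches(service_selector, p["labels"])]
print(endpoints)
```

Extra labels on the pod do not prevent a match; only the keys named in the selector are compared.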

Once the YAML file is created we can launch the configuration by using the kubectl apply command.

    kubectl apply -f hackazon_deployment_service.yaml
    deployment.apps/hackazon created
    service/hackazon created

Now we can check the status of our services using kubectl get commands to verify which objects have been created in our cluster. Note that the ClusterIP service uses an internal IP address and is only reachable from inside the cluster.

    kubectl get pods
    NAME                       READY   STATUS    RESTARTS   AGE
    hackazon-b94df7bdc-4d7bd   1/1     Running   0          66s
    hackazon-b94df7bdc-9pcxq   1/1     Running   0          66s
    hackazon-b94df7bdc-h2dm4   1/1     Running   0          66s

    kubectl get services
    NAME       TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
    hackazon   ClusterIP   10.99.75.86   <none>        80/TCP    78s

At this point I would like to introduce, just to add some extra fun, an interesting graphical tool for Kubernetes cluster management called Octant, which is freely available at https://github.com/vmware-tanzu/octant. Octant can be easily installed on the OS of your choice. Before using it you need access to a healthy K8s cluster. You can check this with the cluster-info command. The output should look something like this:

kubectl cluster-info

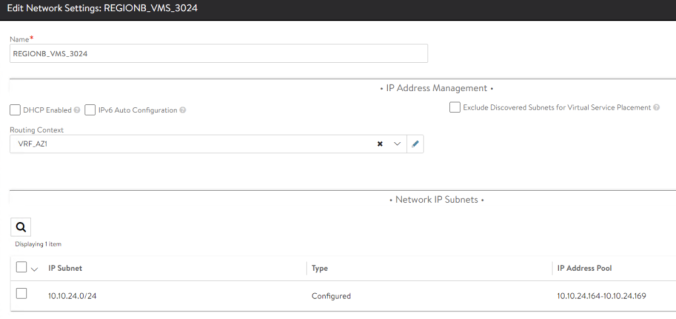

Kubernetes master is running at https://10.10.24.160:6443

KubeDNS is running at https://10.10.24.160:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.Using Octant as K8s dashboard

Once the above requirement is fulfilled you just need to install and run Octant following the instructions provided on the Octant website. The tool is accessed via web at http://127.0.0.1:7777. You can easily check the status of the Deployment, Pods and ReplicaSets from Workloads > Overview.

You can also verify the status of the ClusterIP service we have created from Discovery and Load Balancing > Services.

Once Octant is deployed, let’s move to the ingress service. In this case we will use the following YAML file to declare the ingress service that will expose our application.

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: hackazon
      labels:
        app: hackazon
    spec:
      rules:
      - host: hackazon.avi.iberia.local
        http:
          paths:
          - path: /
            backend:
              serviceName: hackazon
              servicePort: 80

I will use the Apply YAML option in the top bar of the Octant interface to push the configuration to the K8s API. When we press the Apply button, a message confirming that an Ingress service has been created appears in a bar at the top of the UI.

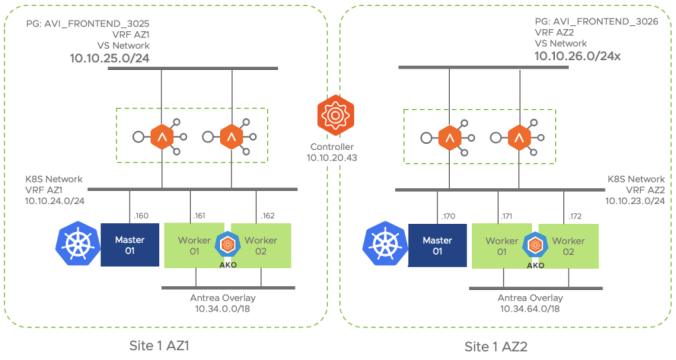

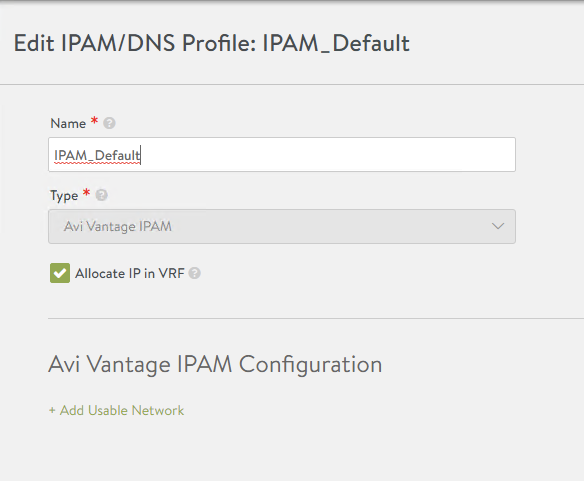

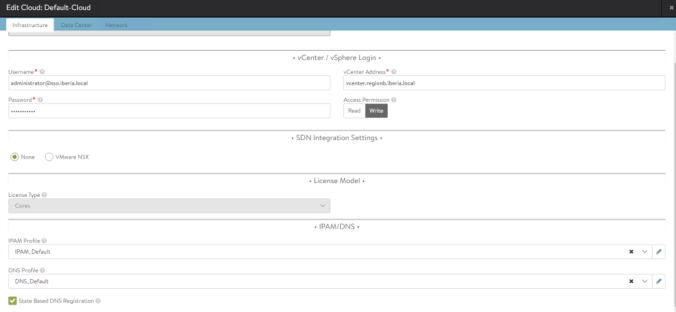

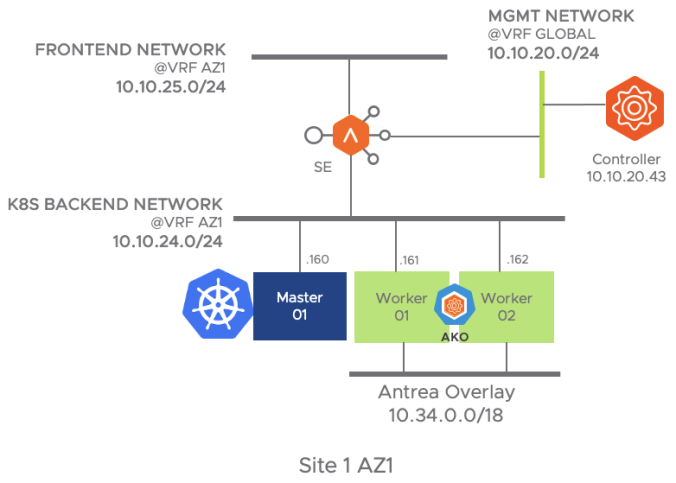

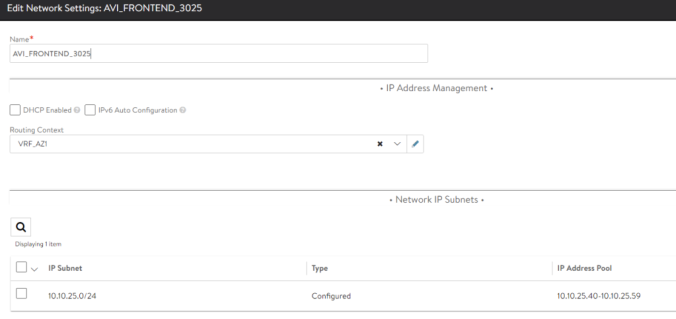

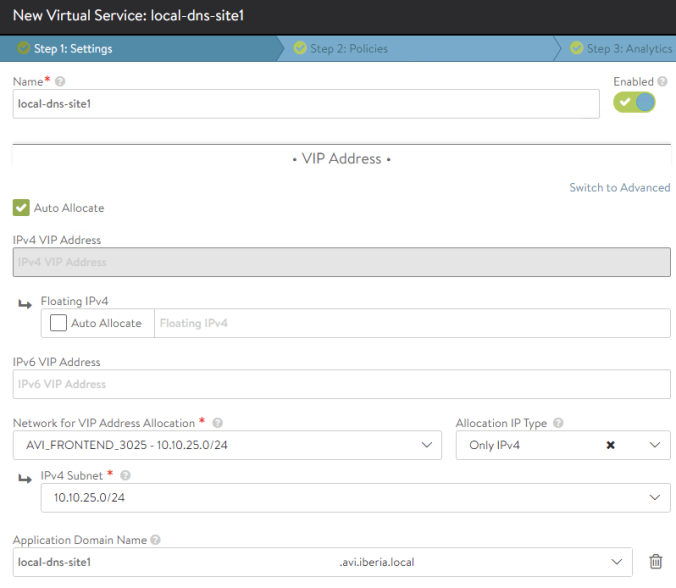

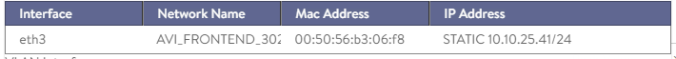

After applying, we can see that our new Ingress object has been created and that our AKO integration must have worked, since an external IP address from our frontend subnet (10.10.25.46) has been assigned. This is an indication of a successful dialogue between the AKO controller and the API endpoint of the AVI Controller.
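The address that Octant displays is stored in the `status.loadBalancer.ingress` list of the Ingress object, which is also what you would find in the output of `kubectl get ingress hackazon -o json`. As a small illustration, the Python sketch below pulls the address out of such an object. The `sample` dictionary is hand-written from the values in this article rather than captured from the cluster, and the `pending` object is a hypothetical Ingress that no controller has fulfilled yet.

```python
def ingress_addresses(ingress):
    """Collect (ip, hostname) pairs from status.loadBalancer.ingress."""
    entries = ingress.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    return [(entry.get("ip"), entry.get("hostname")) for entry in entries]


# Hand-written stand-in for the JSON form of the hackazon Ingress.
sample = {
    "metadata": {"name": "hackazon", "namespace": "default"},
    "status": {
        "loadBalancer": {
            "ingress": [{"ip": "10.10.25.46", "hostname": "hackazon.avi.iberia.local"}]
        }
    },
}

# Hypothetical Ingress that has not received an address yet.
pending = {"metadata": {"name": "pending"}, "status": {"loadBalancer": {}}}

print(ingress_addresses(sample))
print(ingress_addresses(pending))
```

An empty result simply means the ingress controller has not written an address back yet, which is the first thing to check when the integration does not seem to work.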

Octant is a great tool that provides a nice representation of how the different K8s objects are related to each other. If we click on our hackazon service and go to the Resource Viewer option, we get a graphical view of the services, ReplicaSet, ingress, deployment, pods, etc.

Now let’s move to the AKO piece. As mentioned AKO will act as an ingress controller and it should translate the resources of kind Ingress into the corresponding external Service Engine (Data Path) configuration that will cope with the traffic needs.

Exploring AKO Logs for Ingress Creation

If we look into the logs that the AKO pod has produced, we can see that the following relevant events have occurred:

    # A new ingress object is created. Attributes such as hostname, port, path are passed in the API Request
    2020-12-14T13:19:20.316Z INFO nodes/validator.go:237 key: Ingress/default/hackazon, msg: host path config from ingress: {"PassthroughCollection":null,"TlsCollection":null,"IngressHostMap":{"hackazon.avi.iberia.local":[{"ServiceName":"hackazon","Path":"/","Port":80,"PortName":"","TargetPort":0}]}}

    # An existing VS object called S1-AZ1--Shared-L7-0 will be used as a parent object for hosting this new Virtual Service
    2020-12-14T13:19:20.316Z INFO nodes/dequeue_ingestion.go:321 key: Ingress/default/hackazon, msg: ShardVSPrefix: S1-AZ1--Shared-L7-
    2020-12-14T13:19:20.316Z INFO nodes/dequeue_ingestion.go:337 key: Ingress/default/hackazon, msg: ShardVSName: S1-AZ1--Shared-L7-0

    # A new server Pool will be created
    2020-12-14T13:19:20.316Z INFO nodes/avi_model_l7_hostname_shard.go:37 key: Ingress/default/hackazon, msg: Building the L7 pools for namespace: default, hostname: hackazon.avi.iberia.local
    2020-12-14T13:19:20.316Z INFO nodes/avi_model_l7_hostname_shard.go:47 key: Ingress/default/hackazon, msg: The pathsvc mapping: [{hackazon / 80 100 0}]
    2020-12-14T13:19:20.316Z INFO nodes/avi_model_l4_translator.go:245 key: Ingress/default/hackazon, msg: found port match for port 80

    # The pool is populated with the endpoints (Pods) that will act as pool members for that pool.
    2020-12-14T13:19:20.316Z INFO nodes/avi_model_l4_translator.go:263 key: Ingress/default/hackazon, msg: servers for port: 80, are: [{"Ip":{"addr":"10.34.1.5","type":"V4"},"ServerNode":"site1-az1-k8s-worker02"},{"Ip":{"addr":"10.34.1.6","type":"V4"},"ServerNode":"site1-az1-k8s-worker02"},{"Ip":{"addr":"10.34.2.6","type":"V4"},"ServerNode":"site1-az1-k8s-worker01"}]
    2020-12-14T13:19:20.317Z INFO objects/avigraph.go:42 Saving Model :admin/S1-AZ1--Shared-L7-0

    # The IP address 10.10.25.46 has been allocated for the k8s ingress object
    2020-12-14T13:19:21.162Z INFO status/ing_status.go:133 key: admin/S1-AZ1--Shared-L7-0, msg: Successfully updated the ingress status of ingress: default/hackazon old: [] new: [{IP:10.10.25.46 Hostname:hackazon.avi.iberia.local}]
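The log lines above share a regular shape: timestamp, level, source file and line, then an optional `key: ..., msg: ...` pair. If you want to follow these events without reading every line, a small parser helps. The Python sketch below is inferred only from the lines shown in this article, so the regular expressions and the helper names (`parse_line`, `allocated_ips`) are assumptions and may not cover every message AKO can emit.

```python
import re

# Layout inferred from the sample lines: ts, level, file:line, optional key/msg.
LINE_RE = re.compile(
    r"^(?P<ts>\S+)\s+(?P<level>[A-Z]+)\s+(?P<source>\S+:\d+)\s+"
    r"(?:key: (?P<key>[^,]+), msg: )?(?P<msg>.*)$"
)
IP_RE = re.compile(r"IP:(\d{1,3}(?:\.\d{1,3}){3})")


def parse_line(line):
    """Split one log line into its fields, or return None if it does not match."""
    match = LINE_RE.match(line.strip())
    return match.groupdict() if match else None


def allocated_ips(lines):
    """Return the IPs found in the 'new' part of ingress status updates."""
    ips = []
    for line in lines:
        rec = parse_line(line)
        if rec and "Successfully updated the ingress status" in rec["msg"]:
            new_part = rec["msg"].split(" new: ", 1)[-1]  # ignore the old status
            ips.extend(IP_RE.findall(new_part))
    return ips


sample_lines = [
    "2020-12-14T13:19:20.317Z INFO objects/avigraph.go:42 Saving Model :admin/S1-AZ1--Shared-L7-0",
    "2020-12-14T13:19:21.162Z INFO status/ing_status.go:133 key: admin/S1-AZ1--Shared-L7-0, "
    "msg: Successfully updated the ingress status of ingress: default/hackazon "
    "old: [] new: [{IP:10.10.25.46 Hostname:hackazon.avi.iberia.local}]",
]

for raw in sample_lines:
    print(parse_line(raw))
print(allocated_ips(sample_lines))
```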

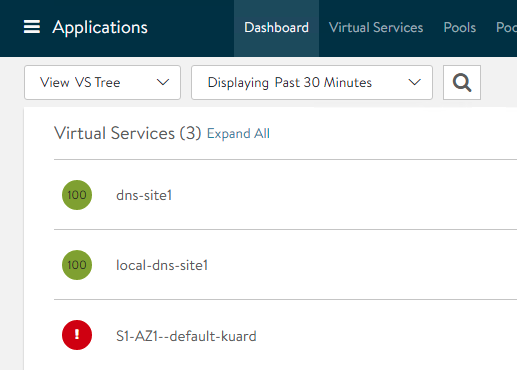

Exploring Ingress realization at AVI GUI

Now we can explore the AVI Controller to see how these API calls from AKO are reflected in the GUI.

For insecure ingress objects, AKO uses a sharding scheme, which means that some configuration is shared within a single object in order to save public IP address space. The configuration objects that are created in the SE are listed here:

- A shared parent Virtual Service object is created. The name is derived from <cluster_name>--Shared-L7-<ID>. In this case the cluster name is set in the values.yaml file and corresponds to S1-AZ1, and the allocated ID is 0.

- A Pool Group object that contains a single Pool member. The Pool Group name is also derived from the cluster name: <cluster_name>--hostname

- A priority label that is associated with the Pool Group, with the name host/path. In this case hackazon.avi.iberia.local/

- An associated DataScript object that interprets the host/path combination of the incoming request, so that the pool is chosen based on the priority label

You can check the automatically created DataScript in Templates > Scripts > DataScript. Its content is shown below. Basically, it extracts the host and the path from the incoming HTTP request and selects the corresponding pool group.

    host = avi.http.get_host_tokens(1)
    path = avi.http.get_path_tokens(1)
    if host and path then
      lbl = host.."/"..path
    else
      lbl = host.."/"
    end
    avi.poolgroup.select("S1-AZ1--Shared-L7-0", string.lower(lbl) )

By the way, note that the shared Virtual Service object is displayed in yellow. The color reflects a composite health score that is obtained from several factors. If we hover the mouse over the Virtual Service object we can see two factors that influence the score of 72 and the yellow color. In this case there is a 20-point penalty because this is an insecure virtual service, and also a decrement of 5 from a resource penalty associated with the fact that this is a very young service (just created). These metrics are used by the system to determine the optimal path for the traffic when there are different options to choose from.
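Coming back to the DataScript for a moment, its label-building step is easy to mirror outside of AVI. The Python sketch below reproduces only the string logic that produces the priority label. It rests on an assumption that I have not verified against the AVI documentation: `path_tokens` is a hypothetical stand-in for `avi.http.get_path_tokens(1)` that returns the path without its leading slash, or nothing when the path is empty. How `avi.poolgroup.select` then matches the label against the pool group is not modelled here.

```python
from typing import Optional

SHARED_VS = "S1-AZ1--Shared-L7-0"  # pool group name used by the DataScript


def path_tokens(path: str) -> Optional[str]:
    """ASSUMPTION: stand-in for avi.http.get_path_tokens(1).

    Returns the path without its leading slash, or None for an empty path.
    """
    trimmed = path.lstrip("/")
    return trimmed or None


def priority_label(host: str, path: Optional[str]) -> str:
    """Mirror the if/else of the DataScript and lowercase the result."""
    if path is not None:
        label = host + "/" + path
    else:
        label = host + "/"
    return label.lower()


# A request for the root path yields the label quoted in the list above.
print(SHARED_VS, priority_label("hackazon.avi.iberia.local", path_tokens("/")))
# Hypothetical deeper path, with mixed case in the Host header.
print(SHARED_VS, priority_label("Hackazon.AVI.iberia.local", path_tokens("/cart/view")))
```

The lowercase step matters because host names are case-insensitive, so the label has to be normalized before it is compared with the one stored on the pool group.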

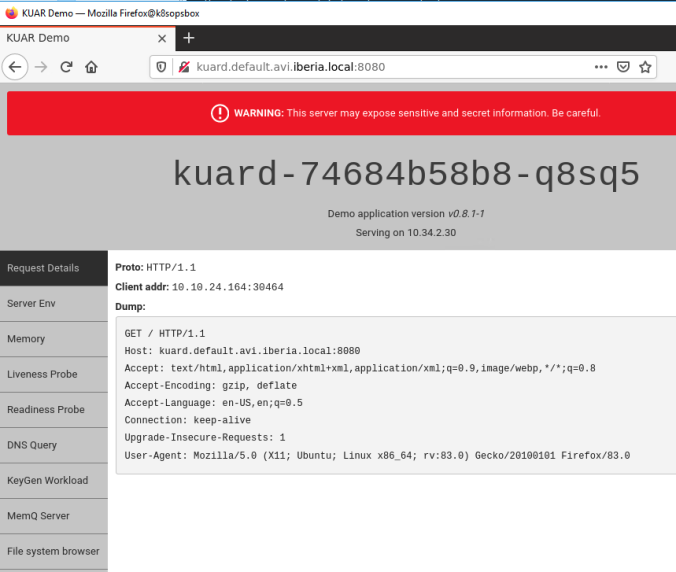

Let’s create a new ingress using the following YAML file. This time we will use the kuard application. The content of the YAML file that defines the Deployment, Service and Ingress objects is shown below:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kuard
      labels:
        app: kuard
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: kuard
      template:
        metadata:
          labels:
            app: kuard
        spec:
          containers:
          - image: gcr.io/kuar-demo/kuard-amd64:1
            name: kuard
            ports:
            - containerPort: 8080
              name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kuard
    spec:
      selector:
        app: kuard
      ports:
      - port: 80
        targetPort: 8080
    ---
    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: kuard
      labels:
        app: kuard
    spec:
      rules:
      - host: kuard.avi.iberia.local
        http:
          paths:
          - path: /
            backend:
              serviceName: kuard
              servicePort: 80

Once it is applied using the kubectl apply -f command, we can see how a new Pool has been created under the same shared Virtual Service object.

As you can see the two objects are sharing the same IP address. This is very useful to save public IP addresses. The DataScript will be in charge of routing the incoming requests to the right place.
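One detail of the kuard manifest is worth spelling out: the Ingress points at Service port 80, while the containers listen on 8080, so the Service has to translate between the two. The Python sketch below shows that lookup on plain dictionaries. The helper name `resolve_target_port` is hypothetical, and this is only the Kubernetes Service rule (a missing `targetPort` defaults to `port`), not a description of AKO internals.

```python
def resolve_target_port(service, service_port):
    """Return the pod-side port that a given Service port forwards to."""
    for entry in service["spec"]["ports"]:
        if entry["port"] == service_port:
            # targetPort defaults to the Service port when it is omitted.
            return entry.get("targetPort", entry["port"])
    raise LookupError(f"service exposes no port {service_port}")


# Service specs taken from the manifests in this article.
kuard_service = {"spec": {"ports": [{"port": 80, "targetPort": 8080}]}}
hackazon_service = {"spec": {"ports": [{"port": 80, "targetPort": 80}]}}

print(resolve_target_port(kuard_service, 80))
print(resolve_target_port(hackazon_service, 80))
```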

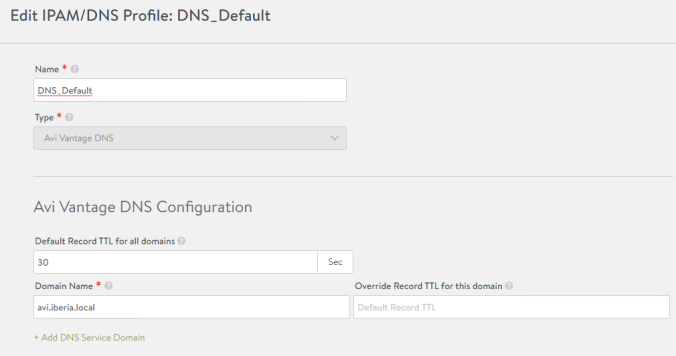

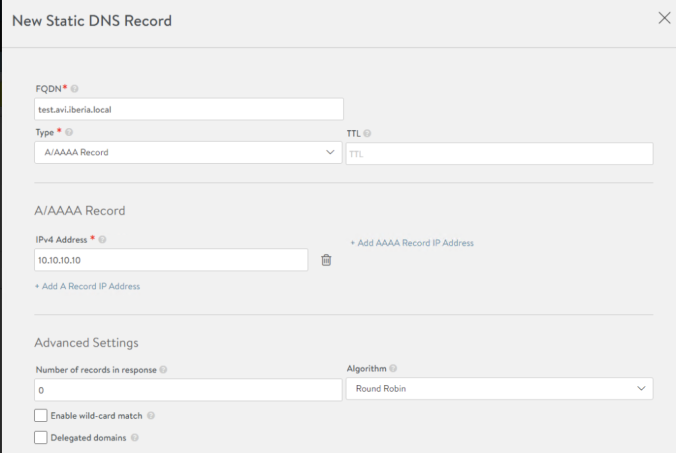

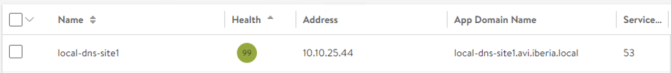

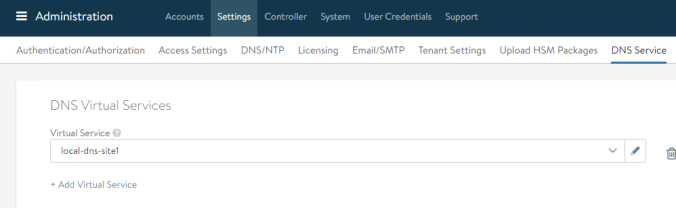

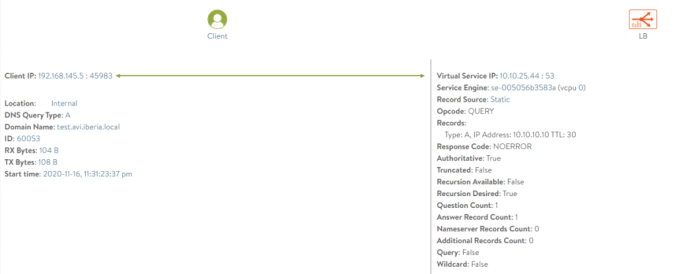

Last verification. Let’s try to resolve the hostnames using the integrated DNS in AVI. Note how both queries resolve to the same IP address since we are sharing the Virtual Service object. There are other options to share the parent VS among the different ingress services. The default option is sharding by hostname, but you can also define a sharding scheme based on the namespace.

    dig hackazon.avi.iberia.local @10.10.25.40 +noall +answer
    hackazon.avi.iberia.local. 5 IN A 10.10.25.46

    dig kuard.avi.iberia.local @10.10.25.40 +noall +answer
    kuard.avi.iberia.local. 5 IN A 10.10.25.46

The final step is to open a browser and check that our applications are actually working. If we point our browser to the FQDN at http://hackazon.avi.iberia.local we can see how the web application is launched.

We can do the same for the other application by pointing at http://kuard.avi.iberia.local
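The DNS part of this verification is also easy to script. As a small sketch, the Python snippet below parses answer lines in the format printed by `dig +noall +answer` and checks whether all names land on one address. The input text is copied from the answers shown above instead of being fetched live, and the helper name `parse_answers` is hypothetical.

```python
def parse_answers(text):
    """Map each owner name to its address for the A records in dig answer lines."""
    records = {}
    for line in text.splitlines():
        parts = line.split()
        # Expected columns: name, TTL, class, type, address
        if len(parts) == 5 and parts[2] == "IN" and parts[3] == "A":
            records[parts[0].rstrip(".")] = parts[4]
    return records


dig_output = """\
hackazon.avi.iberia.local. 5 IN A 10.10.25.46
kuard.avi.iberia.local. 5 IN A 10.10.25.46
"""

records = parse_answers(dig_output)
shares_one_ip = len(set(records.values())) == 1
print(records)
print("same virtual service IP:", shares_one_ip)
```

Because both names resolve to one address, the only thing that tells the two applications apart on the wire is the Host header of the HTTP request.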

Note that the browsing activity for both applications, which share the same Virtual Service construct, will appear under the same Analytics view, the one related to the S1-AZ1--Shared-L7-0 parent VS object.

If we need to focus on just one of the applications we can filter using, for example, the Host Header attribute in the Log Analytics ribbon located at the right of the Virtual Services > S1-AZ1--Shared-L7-0 > Logs screen.

If we click on the hackazon.avi.iberia.local Host we can see all the logs related to the hackazon site.

That’s all for now for the insecure objects. Let’s move into the next section to explore the secure ingress services.