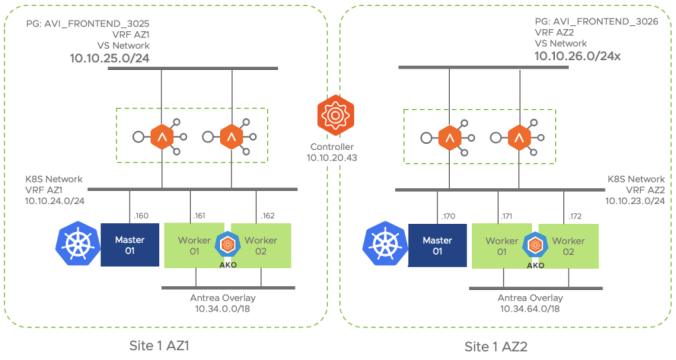

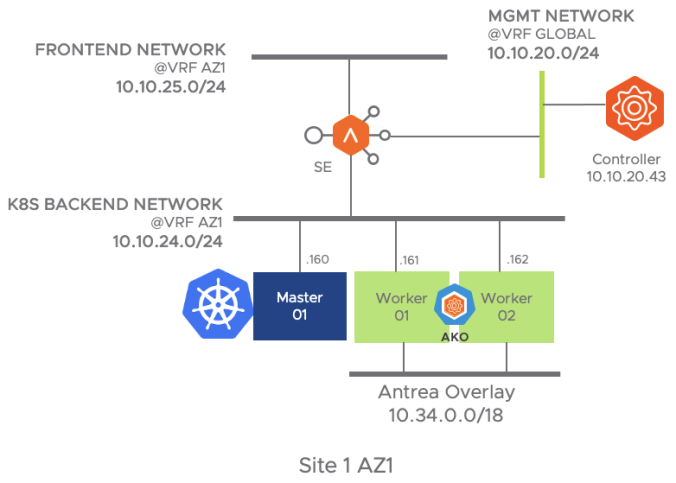

The very first step to start using NSX Advanced Load Balancer (a.k.a. AVI Networks) is to prepare the infrastructure. The envisaged topology is represented in the figure below. I will simulate an environment with two K8s clusters that might represent two availability zones (AZs) in the same site. Strictly speaking, an availability zone must be a unique physical location within a region, equipped with independent power, cooling, and networking. For the sake of simplicity we will simulate that condition within a single vCenter Datacenter running on the very same physical infrastructure. I will focus on a single-region multi-AZ scenario that will evolve into a multi-region one in subsequent parts of this blog series.

AVI proposes a very modern load-balancing architecture in which the Control Plane (AVI Controller) is separated from the Data Plane (AVI Service Engines). The Data Plane is created on demand as you create Virtual Services. To spin up the Service Engines in an automated fashion, the AVI Controller uses a Cloud Provider entity that supplies the compute resources needed to bring the Data Plane up. This architectural model, in which the brain is centralized, fits very well with VMware’s Virtual Cloud Network strategy around Modern Network Solutions: “any app, any platform, any device”. The strategy aims to extend network services (load balancing in this case) virtually anywhere, regardless of where our application lives and which cloud provider we are using.

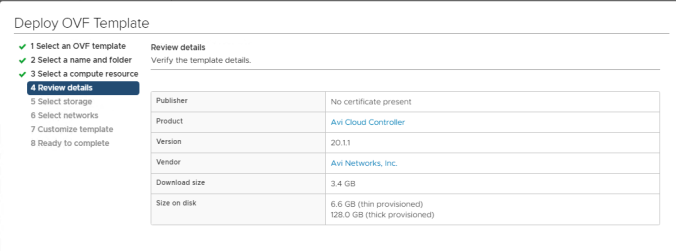

Step 1: AVI Controller Installation

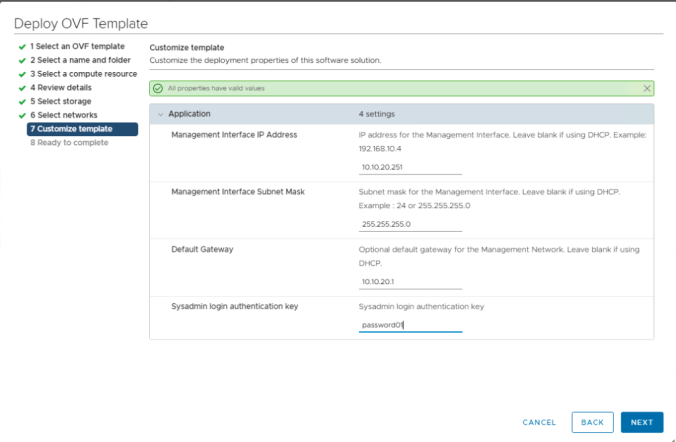

AVI Controller installation is quite straightforward. If you are using vSphere, you just need the OVA file: from the vCenter client, deploy a new VM from the OVF template in the desired infrastructure.

Complete all the steps according to your particular requirements, such as Cluster, Folder, Storage, Networks, etc. The final step is a Customize template page where you set some base configuration for the virtual machine. The minimum requirements for the AVI Controller are 8 vCPU, 24 GB vRAM and 128 GB vHDD.

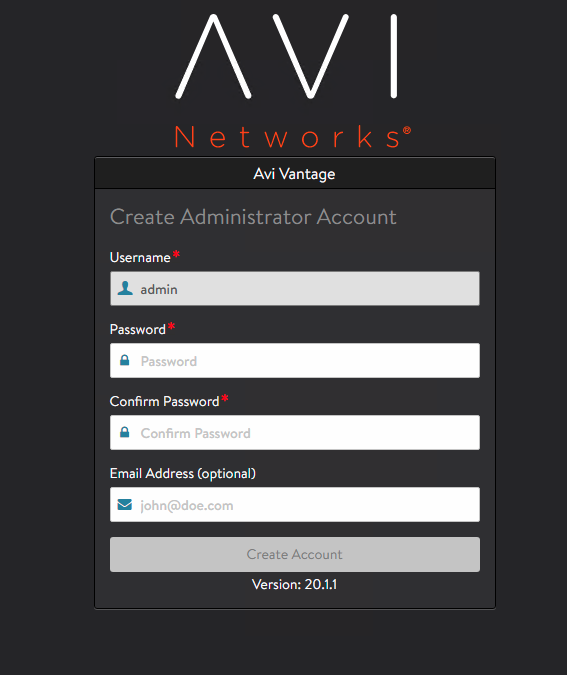

When the deployment is ready, power on the newly created virtual machine and wait a few minutes until the boot process completes. Then connect to the web interface at https://<AVI_ip_address> using the Management Interface IP address you selected above.

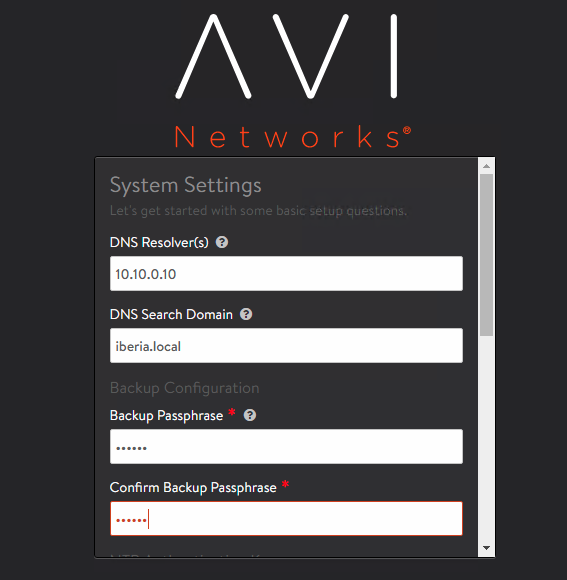

Add the Network information, DNS, NTP… etc as per your local configuration.

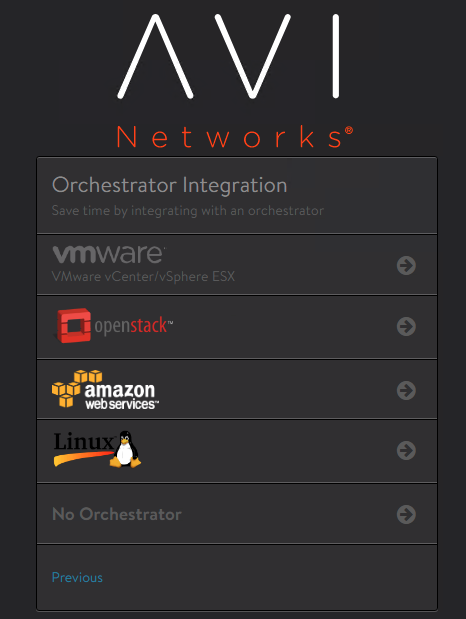

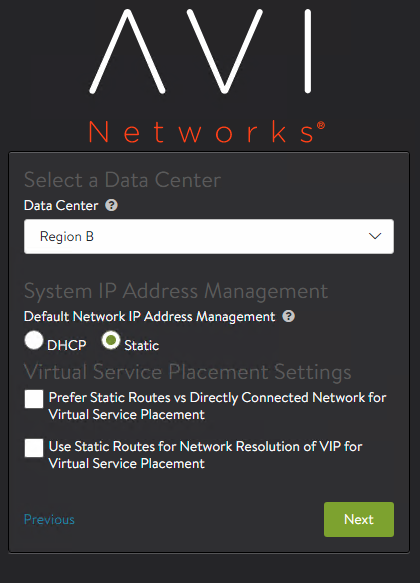

Next you will get to the Orchestrator Integration page. We are using VMware vSphere here, so click the arrow in the VMware tile to proceed with the vCenter integration.

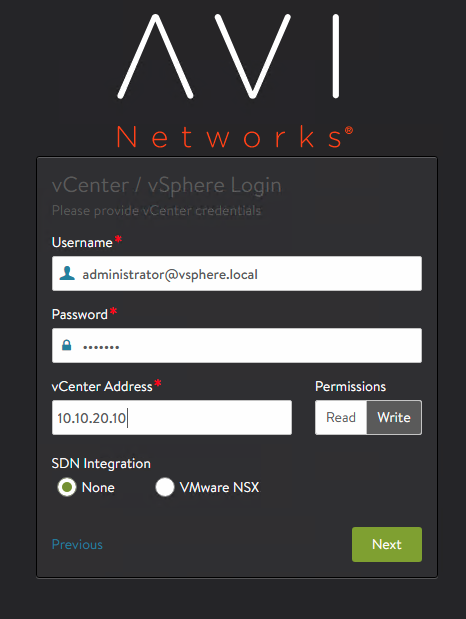

Populate the username, password and FQDN of the vCenter server.

Select write mode and leave the rest of the configuration with the default values.

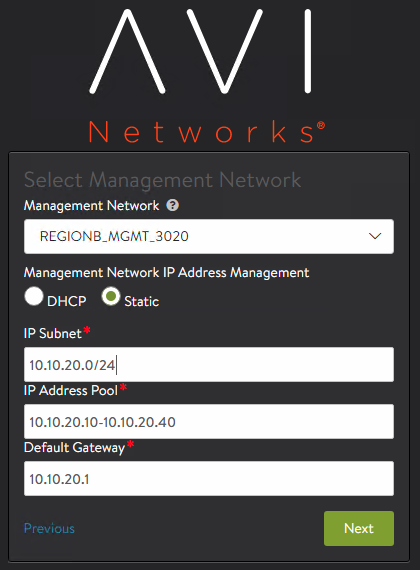

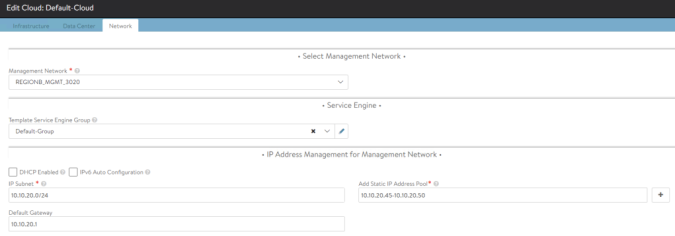

Select the Management Network that will be used for Service Engine to establish connectivity with the AVI Controller. If using Static you need to define Subnet, Address Pool and the Default Gateway.

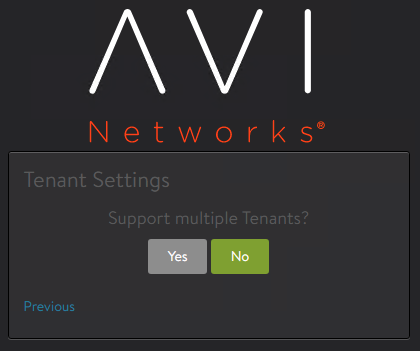

The final step asks if we want to support multiple Tenants. We will use a single tenant model so far. The name of the tenant will be admin.

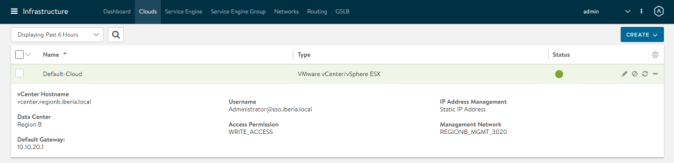

Once the initial wizard is complete, we should be able to get into the GUI, go to Infrastructure > Clouds and click on the + symbol at the right of Default-Cloud (this is the default name assigned to our vCenter integration). You should see a green status icon showing that the integration has succeeded, as well as the configuration parameters.

Now that the AVI Controller has been installed and the base cloud integration is done, let’s go through the remaining steps needed to complete the configuration. Note: at the time of writing this article, the AKO integration is supported on vCenter full-access, and the only networks supported for Service Engine placement are PortGroup-based (VLAN-backed). Check the Release Notes here regularly.

Step 2: IPAM and DNS

AVI is a Swiss Army knife solution that provides not only load-balancing capabilities but also important peripheral services such as IPAM and DNS. IPAM is needed to assign IP addresses automatically when a new Virtual Service is created, and the DNS module registers the configured Virtual Service FQDN in an internal DNS service that can be queried for name resolution. We need to attach an IPAM and a DNS profile to the vCenter Cloud integration in order to activate those services.

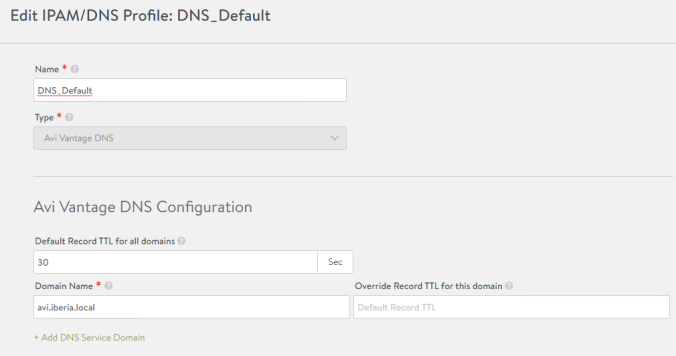

From the AVI GUI we go to Templates > IPAM/DNS Profiles > CREATE > DNS Profile and name it DNS_Default for example.

I will use avi.iberia.local as my default domain. Another important setting is the TTL. The DNS TTL (time to live) tells a DNS resolver how long to cache an answer before requesting a new one. The shorter the TTL, the less time the resolver holds that information in its cache. The TTL therefore affects the query volume (i.e. traffic) directed to the DNS Virtual Service. For records that rarely change, such as MX, the TTL normally ranges from 3600 to 86400 seconds. For dynamic services it’s best to keep the TTL somewhat shorter. Values shorter than 30 seconds are typically not honored by most recursive servers, and the results might not be favorable in the long run. We will keep 30 seconds as the default for now.
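To get a feel for how the TTL drives query volume, here is a rough back-of-the-envelope sketch in Python. It assumes a worst case in which every resolver asks again the moment its cached copy expires. The function name and the figure of 1000 resolvers are illustrative assumptions, not something taken from AVI.

```python
def max_queries_per_second(resolvers: int, ttl_seconds: int) -> float:
    """Upper bound on steady-state queries per second for ONE record.

    Assumption: each resolver caches the answer for exactly `ttl_seconds`
    and re-queries as soon as the cache entry expires (worst case).
    """
    if ttl_seconds <= 0:
        raise ValueError("ttl_seconds must be positive")
    return resolvers / ttl_seconds


if __name__ == "__main__":
    # Compare the 30 s default used here with longer, MX-style TTLs.
    for ttl in (30, 3600, 86400):
        qps = max_queries_per_second(resolvers=1000, ttl_seconds=ttl)
        print(f"TTL {ttl:>5} s -> at most {qps:.3f} queries/s per record")
```

Real traffic will be lower, because resolvers only refresh a record when a client actually asks for it, but the inverse relationship between TTL and load on the DNS Virtual Service holds.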

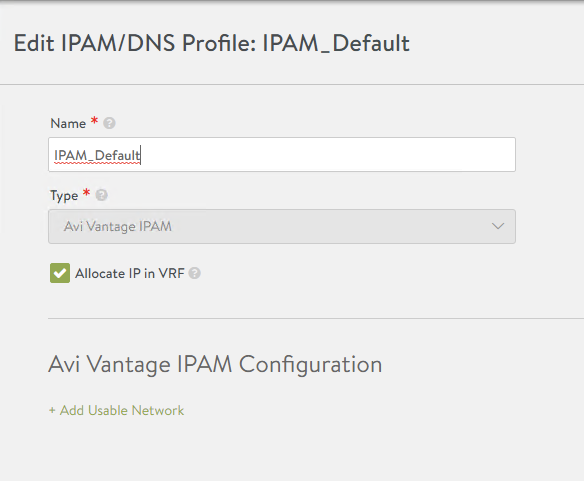

Similarly now we go to Templates > IPAM/DNS Profiles > CREATE > IPAM Profile

Since we will use VRFs to isolate both K8s clusters we check the “Allocate IP in VRF” option. There’s no need to add anything else at this stage.

Step 3: Configure the Cloud

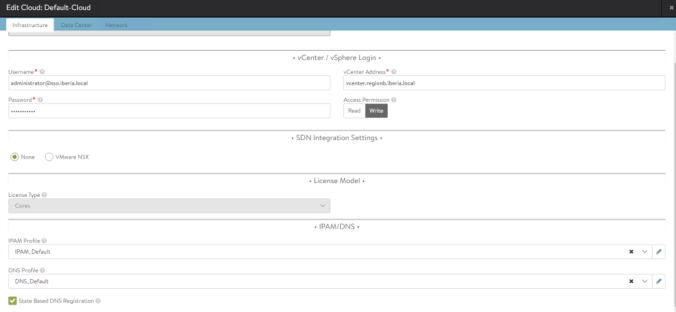

Now it’s time to attach these profiles to the Cloud integration with vCenter. From the AVI GUI: Infrastructure > Default-Cloud > Edit (pencil icon).

Next, assign the newly created DNS and IPAM profiles in the IPAM/DNS section at the bottom of the window. State Based DNS Registration is an option that lets the DNS service monitor the operational state of the VIPs and create or delete the DNS entries accordingly.
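The idea behind State Based DNS Registration can be pictured with a small toy model in Python. This is only a conceptual sketch and not how the AVI Controller implements it internally: the `DnsTable` class, its `sync` method and the FQDN `app.avi.iberia.local` are hypothetical names made up for the illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class DnsTable:
    """Toy record store: FQDN -> VIP address (hypothetical model)."""
    records: Dict[str, str] = field(default_factory=dict)

    def sync(self, fqdn: str, vip: str, vip_is_up: bool) -> None:
        """Keep the A record only while the VIP is operationally up."""
        if vip_is_up:
            self.records[fqdn] = vip      # create or refresh the entry
        else:
            self.records.pop(fqdn, None)  # remove the entry if present


if __name__ == "__main__":
    table = DnsTable()
    table.sync("app.avi.iberia.local", "10.10.25.45", vip_is_up=True)
    print(table.records)   # record present while the VIP is up
    table.sync("app.avi.iberia.local", "10.10.25.45", vip_is_up=False)
    print(table.records)   # record withdrawn once the VIP goes down
```

The point of the option is the second call: clients stop receiving an address for a service that can no longer answer.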

We also need to check the Management Network as we defined it in the AVI Controller Installation. This network is intended for control plane and management functions, so there’s no need to place it in any of the VRFs that we will use for the data path. In our case we will use a network that corresponds to a vCenter Port Group called REGIONB_MGMT_3020, as defined during the initial setup wizard. I have allocated a small range of 6 IPs, since this is a test environment and only a low number of SEs will be spun up. Adjust according to your environment.

Step 4: Define VRFs and Networks

When multiple K8s clusters are in place, VRFs are required to isolate the clusters from one another from a routing perspective. Note that the automatic discovery of networks (e.g. PortGroups) in the compute manager (vCenter in this case) places them into the default VRF, which is the global VRF. To achieve isolation we need to assign the discovered networks manually to the corresponding VRFs. In this case I will use two VRFs: VRF_AZ1 for resources that are part of AZ1 and VRF_AZ2 for resources that are part of AZ2. The envisaged network topology (showing only Site 1 AZ1) once an SE is spun up will look like this:

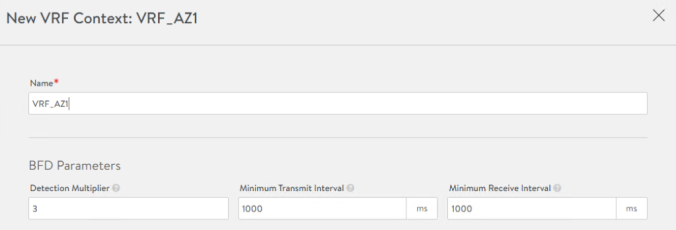

From the AVI GUI, go to Infrastructure > Routing > VRF Context > Create and set up a new VRF with the name VRF_AZ1.

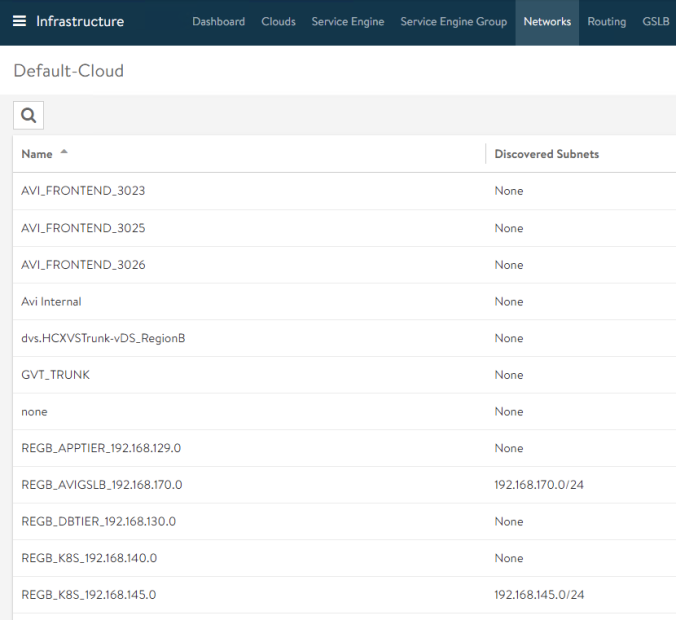

Now, keeping in mind the networks allocated for FRONTEND and BACKEND in the previous network topology figure, we have to identify the corresponding PortGroups discovered by the AVI Controller as part of the vCenter Cloud integration. Under Infrastructure > Networks we can see the full list of discovered networks (port groups) as well as their current subnets.

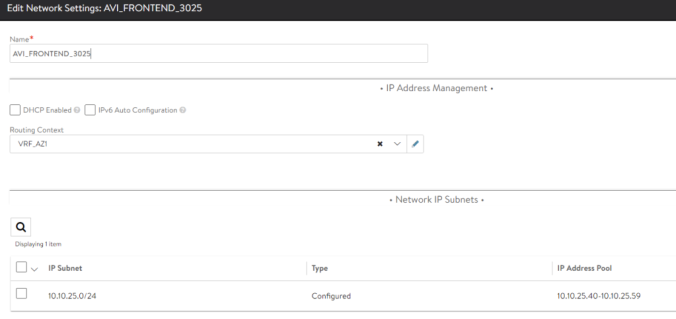

In this case the PortGroup for the front-end network (i.e. where we expose the Virtual Services externally) is named AVI_FRONTEND_3025. If we edit that entry using the pencil icon, we can assign the Routing Context (VRF) and, since I am not using DHCP in my network, manually assign an IP Address Pool. The Controller will pick one of the free addresses to plug the vNIC of the SE into the corresponding network. Note: we are using a two-arm deployment here, in which the frontend network is separated from the backend network (the network for communicating with backend servers), but a one-arm variant is also supported.

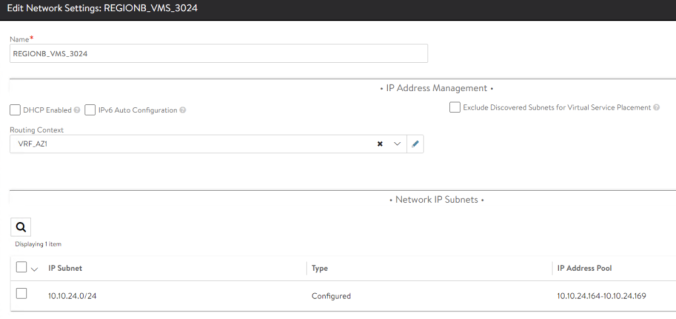

For the backend network we need to do the same configuration changing the Network to REGIONB_VMS_3024 in this case.

Similarly, we have to repeat the process for the other VRF, completing the configuration as per the table below:

| Network_Name | Routing Context | IP Subnet | IP Address Pool | Purpose |
| --- | --- | --- | --- | --- |
| AVI_FRONTEND_3025 | VRF_AZ1 | 10.10.25.0/24 | 10.10.25.40-10.10.25.59 | VIPs for Site 1 AZ1 |
| REGIONB_VMS_3024 | VRF_AZ1 | 10.10.24.0/24 | 10.10.24.164-10.10.24.169 | SE backend connectivity |
| AVI_FRONTEND_3026 | VRF_AZ2 | 10.10.26.0/24 | 10.10.26.40-10.10.26.59 | VIPs for Site 1 AZ2 |
| REGIONB_VMS_3023 | VRF_AZ2 | 10.10.23.0/24 | 10.10.23.40-10.10.23.59 | SE backend connectivity |
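Because the address pools are typed in by hand, it is easy to slip on an octet. A quick sanity check before entering them in the GUI could look like the following Python sketch, which relies only on the standard `ipaddress` module. The helper names `pool_in_subnet` and `pool_size` are my own and not part of any AVI tooling.

```python
import ipaddress


def _parse_pool(pool: str):
    """Split 'start-end' into two IP address objects."""
    start_text, end_text = pool.split("-")
    return (ipaddress.ip_address(start_text.strip()),
            ipaddress.ip_address(end_text.strip()))


def pool_in_subnet(subnet: str, pool: str) -> bool:
    """True if both ends of the pool sit inside the subnet, in order."""
    network = ipaddress.ip_network(subnet)
    start, end = _parse_pool(pool)
    return start in network and end in network and start <= end


def pool_size(pool: str) -> int:
    """Number of addresses in the pool, both ends included."""
    start, end = _parse_pool(pool)
    return int(end) - int(start) + 1


if __name__ == "__main__":
    # A pool that fits its subnet.
    print(pool_in_subnet("10.10.24.0/24", "10.10.24.164-10.10.24.169"))
    print(pool_size("10.10.24.164-10.10.24.169"))
    # An end address with a mistyped octet falls outside the subnet.
    print(pool_in_subnet("10.10.26.0/24", "10.10.26.40-10.20.25.59"))
```

Running a check like this over every row catches a range whose end address strays outside its /24 before the Controller ever sees it.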

Step 5: Define Service Engine Groups for AKO related Services

The Service Engine Group is a logical group with a set of configuration settings and policies that the Service Engines use as a base configuration. The Service Engine Group dictates the High Availability mode, the size of the Service Engines and the metric update frequency, among many other settings. Each AVI Kubernetes Operator (AKO) instance will own a Service Engine Group to deploy the related k8s services. Since we are integrating two separate k8s clusters, we need to define a corresponding Service Engine Group for each of the AKOs. From the AVI GUI, go to Infrastructure > Service Engine Group > CREATE and define the following suggested properties.

| Setting | Value | Tab |
| --- | --- | --- |
| Service Engine Group Name | S1-AZ1-SE-Group | Basic Settings |
| Metric Update Frequency | Real-Time Metrics Checked, 0 min | Basic Settings |
| High Availability Mode | Elastic HA / N+M (buffer) | Basic Settings |
| Service Engine Name Prefix | s1az1 | Advanced |
| Service Engine Folder | AVI K8S/Site1 AZ1 | Advanced |
| Buffer Service Engines | 0 | Advanced |

Similarly let’s create a second Service Engine Group for the other k8s cluster

| Setting | Value | Tab |
| --- | --- | --- |
| Service Engine Group Name | S1-AZ2-SE-Group | Basic Settings |
| Metric Update Frequency | Real-Time Metrics Checked, 0 min | Basic Settings |
| High Availability Mode | Elastic HA / N+M (buffer) | Basic Settings |
| Service Engine Name Prefix | s1az2 | Advanced |
| Service Engine Folder | AVI K8S/Site1 AZ2 | Advanced |
| Buffer Service Engines | 0 | Advanced |
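The two groups differ only in the name, the prefix and the folder, so if you keep your settings in a script or a runbook it can help to derive them from the site and AZ numbers. The Python sketch below does just that. Note that the dictionary keys are the GUI labels from the tables above, not AVI API field names, and the function `se_group_settings` is a hypothetical helper of my own.

```python
def se_group_settings(site: int, az: int) -> dict:
    """Return the suggested SE Group settings for one site/AZ pair.

    Keys mirror the GUI labels used in the tables; they are NOT
    REST API field names.
    """
    return {
        "Service Engine Group Name": f"S{site}-AZ{az}-SE-Group",
        "Metric Update Frequency": "Real-Time Metrics Checked, 0 min",
        "High Availability Mode": "Elastic HA / N+M (buffer)",
        "Service Engine Name Prefix": f"s{site}az{az}",
        "Service Engine Folder": f"AVI K8S/Site{site} AZ{az}",
        "Buffer Service Engines": 0,
    }


if __name__ == "__main__":
    for az in (1, 2):
        for label, value in se_group_settings(site=1, az=az).items():
            print(f"{label}: {value}")
        print()
```

Generating both sets from one template makes it harder for the two groups to drift apart when more clusters are added later.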

Step 6: Define Service Engine Groups for DNS Service

These Service Engine Groups will be used as the configuration base for the k8s-related services such as LoadBalancer and Ingress. However, remember that we also need to implement a DNS service so that clients trying to access our exposed services can resolve their FQDNs. As a best practice, an extra Service Engine Group is needed to host the DNS-related Virtual Services. In this case we will use similar settings for this purpose.

| Setting | Value | Tab |
| --- | --- | --- |
| Service Engine Group Name | DNS-SE-Group | Basic Settings |
| Metric Update Frequency | Real-Time Metrics Checked, 0 min | Basic Settings |
| High Availability Mode | Elastic HA / N+M (buffer) | Basic Settings |
| Service Engine Name Prefix | dns | Advanced |
| Service Engine Folder | AVI K8S/Site1 AZ1 | Advanced |
| Buffer Service Engines | 0 | Advanced |

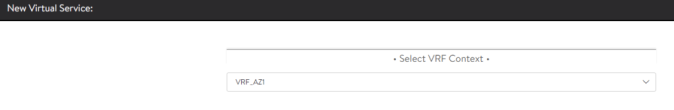

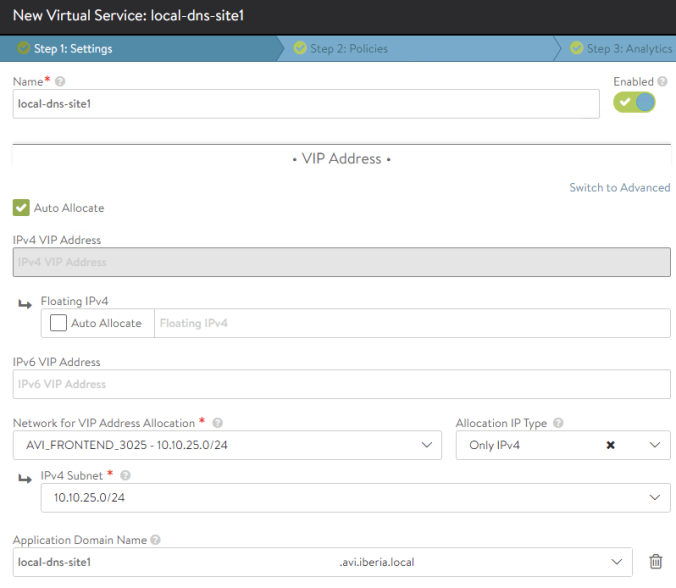

Once done, we can now define our first Virtual Service to serve the DNS queries. Let’s go to Applications > Dashboard > CREATE VIRTUAL SERVICE > Advanced Setup. To keep it simple I will reuse in this case the Frontend Network at AZ1 to place the DNS service and, therefore, the VRF_AZ1. You can choose a dedicated VRF or even the global VRF with the required Network and Pools.

Since we are using the integrated AVI IPAM, we don’t need to worry about IP address allocation. We just need to select the Network in which we want to deploy the DNS Virtual Service, and the system will take one free IP from the defined pool. Once set up and in a ready state, the name of the Virtual Service will be used to create a type A DNS record that dynamically registers the name in the integrated DNS service.
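Conceptually, what the IPAM does here is hand out a free address from the pool and remember that it is taken. The toy allocator below sketches that idea in Python. The lowest-free-address policy, the `AddressPool` class and the tiny two-address pool are assumptions made for illustration; the order in which AVI actually picks addresses is not described here.

```python
import ipaddress


class AddressPool:
    """Toy IPAM pool (hypothetical): hands out the lowest free address."""

    def __init__(self, first: str, last: str) -> None:
        self._first = ipaddress.ip_address(first)
        self._last = ipaddress.ip_address(last)
        self._in_use = set()

    def allocate(self) -> str:
        """Reserve and return the lowest address not yet in use."""
        current = self._first
        while current <= self._last:
            if current not in self._in_use:
                self._in_use.add(current)
                return str(current)
            current += 1
        raise RuntimeError("address pool exhausted")

    def release(self, address: str) -> None:
        """Give an address back, e.g. when a Virtual Service is deleted."""
        self._in_use.discard(ipaddress.ip_address(address))


if __name__ == "__main__":
    pool = AddressPool("10.10.25.40", "10.10.25.41")
    print(pool.allocate())  # first Virtual Service gets an address
    print(pool.allocate())  # second Virtual Service gets the next one
```

A third `allocate()` call on this two-address pool raises `RuntimeError`, which is the situation to avoid by sizing the real pools generously.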

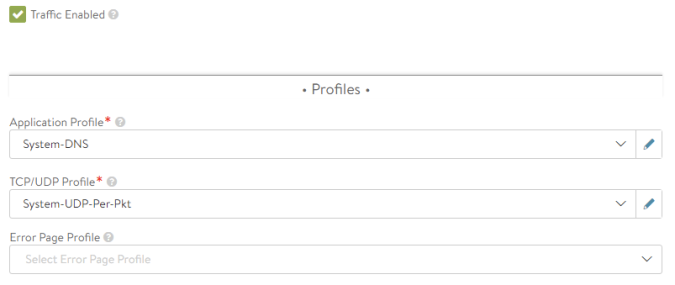

Since we are creating a service that will answer DNS queries, we have to change the Application Profile at the right of the Settings tab from the default System-HTTP to System-DNS, which is the default DNS-specific profile.

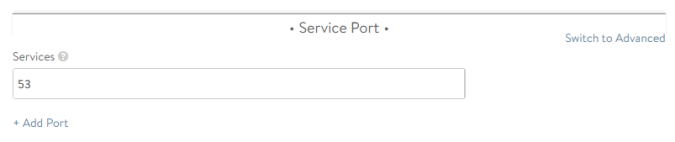

We can see that the Service Port has now changed from the default 80 for System-HTTP to UDP 53 which, as you might know, is the well-known port on which DNS servers listen for queries.

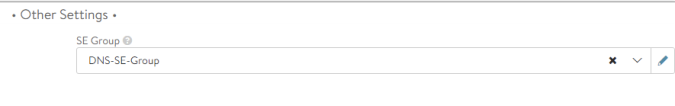

Now, if we click on Next until Step 4: Advanced tab, we can define the SE Group that the system uses when spinning up the Service Engine. We will select the DNS-SE-Group we have just created for this purpose. Remember that we are not creating a Virtual Service to balance across a farm of DNS servers, which is a different story; we are using the embedded DNS service in AVI, so there’s no need to assign a pool of servers to our DNS Virtual Service.

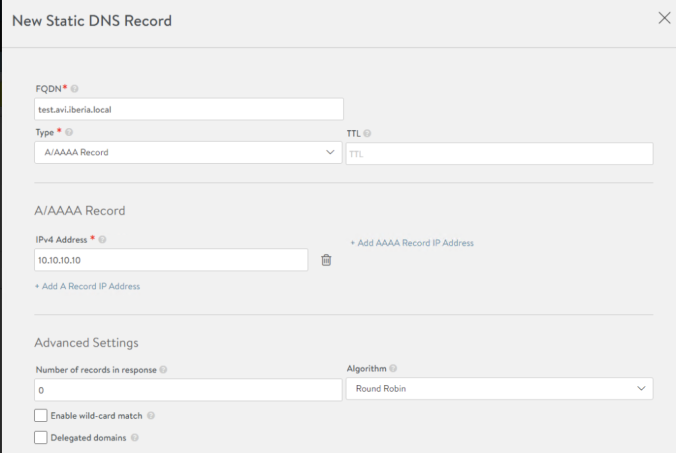

For testing purposes, in the last configuration step let’s create a test DNS record such as test.avi.iberia.local.

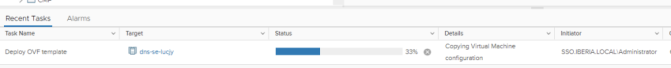

Once done, the AVI Controller will communicate with vCenter to deploy the needed SE. Note that the prefix of the SE matches the Service Engine Name Prefix we defined in the Service Engine Group settings. The VM will be placed in the corresponding folder as per the Service Engine Folder setting within the Service Engine Group configuration.

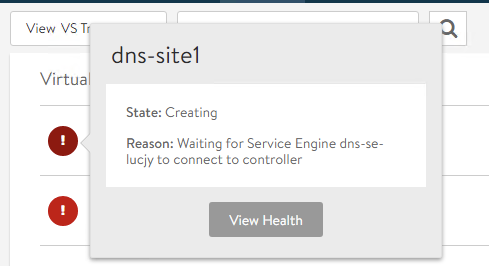

In the Applications > Dashboard section the

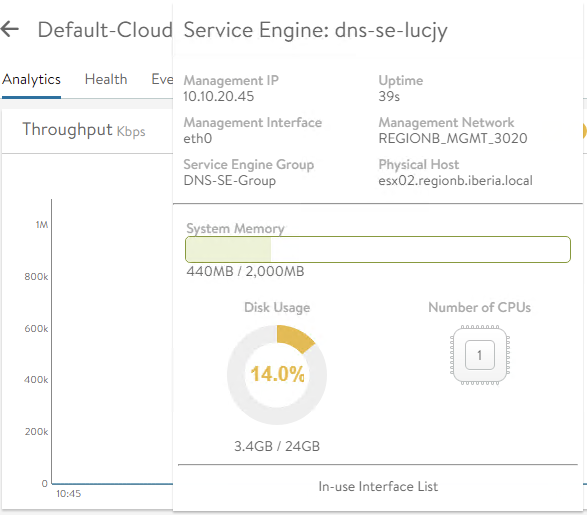

After a couple of minutes we can check the status of the just created Service Engine from the GUI in Infrastructure > Service Engine. Hovering the mouse over the SE name at the top of the screen we can see some properties such as the Uptime, the assigned Management IP, Management Network, Service Engine Group and the physical host the VM is running on.

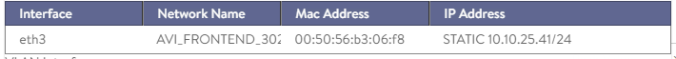

Also if we click in the In-use Interface List at the bottom we can see the IP address assigned to the VM

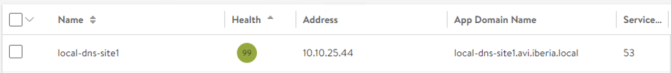

The IP assigned to the VM is not the IP assigned to the DNS VS itself. You can check the assigned IP for the dns-site1 VS from the Applications > Virtual Services page.

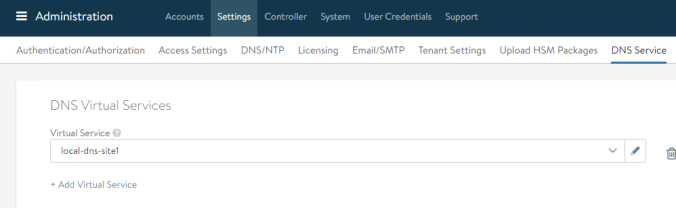

The last step is to instruct the AVI Controller to use the newly created DNS VS when receiving DNS queries. This is done from Administration > Settings > DNS Service, where we select the local-dns-site1 service.

We can now query the A record test.avi.iberia.local using dig.

seiberia@k8sopsbox:~$ dig test.avi.iberia.local @10.10.25.44

; <<>> DiG 9.16.1-Ubuntu <<>> test.avi.iberia.local @10.10.25.44

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 60053

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;test.avi.iberia.local. IN A

;; ANSWER SECTION:

test.avi.iberia.local. 30 IN A 10.10.10.10

;; Query time: 8 msec

;; SERVER: 10.10.25.44#53(10.10.25.44)

;; WHEN: Mon Nov 16 23:31:23 CET 2020

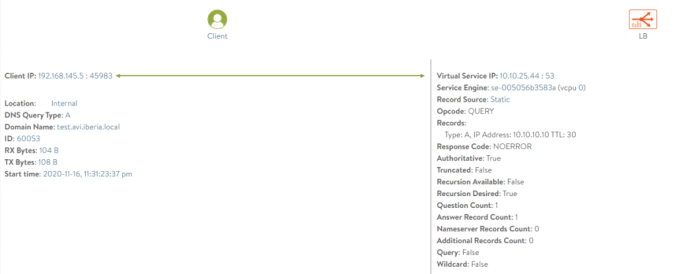

;; MSG SIZE rcvd: 66And remember, one of the coolest features of AVI is the rich analytics. This is the case also for DNS service. As you can see we have rich traceability of the DNS activity. Below you can see how a trace of a DNS query looks like. Virtual Services > local-dns-site1 > Logs (Tick non-Significant Logs radio button)…
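If dig is not available on your ops box, the same A-record lookup against the DNS Virtual Service can be reproduced with a few lines of standard-library Python. The sketch below hand-builds a minimal query following the RFC 1035 wire format and reads the answer count from the reply header. The function names are my own, and it deliberately does not decode the answer section.

```python
import socket
import struct


def build_a_query(fqdn: str, query_id: int = 0x1234) -> bytes:
    """Encode a minimal DNS query for the A record of `fqdn`."""
    # Header: ID, flags (only RD set), QDCOUNT=1, AN/NS/ARCOUNT=0.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, closed by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in fqdn.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE=1 (A), QCLASS=1 (IN).
    return header + qname + struct.pack("!HH", 1, 1)


def answer_count(response: bytes) -> int:
    """Read ANCOUNT (bytes 6-7 of the DNS header) from a reply."""
    return struct.unpack("!H", response[6:8])[0]


def query(server: str, fqdn: str, timeout: float = 2.0) -> bytes:
    """Send the query over UDP port 53 and return the raw reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_a_query(fqdn), (server, 53))
        response, _ = sock.recvfrom(512)
        return response
```

From a machine that can reach the VIP, `answer_count(query("10.10.25.44", "test.avi.iberia.local"))` should report one answer, in line with the `ANSWER: 1` shown by dig above.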

At this point any new Virtual Service will dynamically register its name and its allocated IP address in the DNS service as an A record. Now that the AVI configuration is done, it’s time to move to the next level and start deploying AKO in the k8s cluster.
